20 Best Tools to Extract Data From Websites: Complete Guide for 2025

Top tools to extract and analyze web data effortlessly in 2025

By Jan, August 4, 2025

The average company loses 23 hours weekly to manual data collection from websites. Product managers spend entire afternoons copying competitor pricing. Marketing teams manually track mentions across hundreds of sites. Sales professionals waste mornings researching prospects one LinkedIn profile at a time.

Website data extraction has moved from nice-to-have to business-critical. Companies using automated data extraction achieve 73% faster market research and generate 2.8x more qualified leads than competitors stuck with manual methods. More importantly, they identify opportunities weeks before slower-moving rivals even notice market shifts.

The challenge isn't finding extraction tools; it's choosing solutions that deliver business insights rather than raw data dumps. Most tools require technical expertise that marketing and sales teams don't have. Others produce spreadsheets full of unstructured information that still requires hours of manual analysis.

In this article, we cover 20 website data extraction tools across different use cases, team sizes, and technical requirements. This guide shows which solutions actually work for real businesses, from AI-powered intelligence platforms that understand context to simple point-and-click tools that anyone can use.

Why Manual Data Collection Is Killing Your Competitive Edge

Modern businesses need information that lives scattered across thousands of websites. Competitor pricing changes daily. Customer reviews reveal product opportunities. Industry news shapes market conditions. Social media conversations influence buying decisions. All of this intelligence exists online, but accessing it systematically requires automation.

Manual collection methods simply cannot operate at the speed modern markets demand. A single competitor analysis involving 20 companies and 50 data points requires 15+ hours of manual work. Multiply that across multiple competitors, product categories, and regular monitoring cycles, and the time investment becomes economically impossible.

The accuracy problem compounds the efficiency challenge. Human data collection introduces errors, inconsistencies, and biases that compromise analysis quality. Different team members extract information differently. Copy-paste errors create inaccuracies. Important updates get missed between collection cycles.

Speed determines competitive advantage in data-driven markets. Companies that identify pricing changes within hours can adjust strategies immediately. Those that discover customer complaints quickly can address issues before they spread. Organizations that spot emerging trends first can capitalize on opportunities while competitors remain unaware.

The most successful businesses treat web data extraction as essential infrastructure, not optional convenience. They automate collection processes to focus human effort on analysis and decision-making rather than repetitive research tasks.

What Is Website Data Extraction Technology?

Website data extraction technology automates the process of collecting, parsing, and structuring information from web pages for business analysis. These tools simulate human browsing behavior while operating at machine speed and scale.

The extraction process involves four key stages. First comes target identification, where the specific websites and data types are defined based on business requirements. Second is content retrieval, where automated browsing navigates pages, handles authentication, and processes dynamic content. Third is data processing, where the raw content is cleaned, validated, and formatted into a consistent structure. The final stage is delivery, where results flow through APIs, databases, or exports that integrate with existing business systems.
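The following minimal Python sketch makes the four stages concrete. It is only an illustration and does not reflect how any particular tool works internally. It assumes a hypothetical list of product URLs and uses a crude regular expression that grabs the first dollar price on a page. The `fetch` function is injected, so either a real HTTP client or a test stub can be plugged in.

```python
import json
import re
from dataclasses import dataclass
from typing import Callable

# Crude price pattern, e.g. "$1,299.00" -> "1,299.00" (an assumption for this sketch).
PRICE_RE = re.compile(r"\$\s*([\d,]+(?:\.\d{2})?)")


@dataclass
class Target:
    """Stage 1, target identification: which pages to visit and which field to collect."""
    urls: list[str]
    field: str


def retrieve(target: Target, fetch: Callable[[str], str]) -> dict[str, str]:
    """Stage 2, content retrieval: download raw HTML for every target URL."""
    return {url: fetch(url) for url in target.urls}


def process(raw_pages: dict[str, str], field: str) -> list[dict]:
    """Stage 3, processing: extract, clean, and validate one numeric value per page."""
    records = []
    for url, html in raw_pages.items():
        match = PRICE_RE.search(html)
        if match is None:
            continue  # validation: drop pages where the expected value is missing
        value = float(match.group(1).replace(",", ""))  # cleaning: "1,299.00" -> 1299.0
        records.append({"url": url, field: value})
    return records


def deliver(records: list[dict]) -> str:
    """Stage 4, delivery: serialize to JSON for an API, database loader, or export."""
    return json.dumps(records, indent=2)


# Usage with stubbed pages instead of live HTTP requests (hypothetical URLs).
fake_pages = {
    "https://shop.example/lamp": "<span class='price'>$1,299.00</span>",
    "https://shop.example/chair": "<p>Currently unavailable</p>",
}
target = Target(urls=list(fake_pages), field="price")
records = process(retrieve(target, fake_pages.__getitem__), target.field)
print(deliver(records))
```

In a real pipeline, `fetch` would wrap an HTTP client or a headless browser, and stage 3 would rely on a proper HTML parser rather than a regex.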

Modern extraction tools have evolved far beyond simple HTML parsing. Advanced solutions incorporate machine learning algorithms that recognize content patterns, understand data relationships, and adapt to website changes automatically. This intelligence enables extraction of unstructured information like product descriptions, customer sentiment, and competitive positioning.

The technical architecture matters for business results. Simple scrapers break when websites update their layouts. Intelligent platforms adapt automatically. Basic tools require technical configuration for each data type. AI-powered solutions understand business context and extract relevant information without manual setup.

The most effective extraction tools combine multiple technologies: browser automation for JavaScript-heavy sites, machine learning for pattern recognition, natural language processing for content understanding, and proxy management for scale and reliability.

20 Best Tools to Extract Data From Websites

1. Databar.ai

Databar.ai turns website data extraction from a technical task into a business intelligence solution. Unlike traditional scraping tools that require technical configuration, Databar.ai understands natural language queries and extracts information based on business context rather than HTML structure.

What makes Databar.ai stand out is its AI research agent approach. Instead of configuring extraction rules, users describe what information they need in plain English. The platform automatically navigates relevant websites, identifies pertinent data, and structures findings for immediate business analysis.

Key intelligent capabilities include:

- Natural language query processing that understands business context

- Automatic source identification and navigation across multiple websites

- Contextual data extraction that recognizes relationships and patterns

- Chrome extension for seamless extraction during regular browsing

Our users consistently tell us they're saving 15-20 hours weekly on research tasks that used to consume entire afternoons. The platform eliminates technical barriers that prevent most teams from effectively utilizing web data while delivering comprehensive data enrichment capabilities for business intelligence.

Best for: GTM teams who need insights, not spreadsheets, especially non-technical users who want sophisticated capabilities without the learning curve.

Pricing: Plans start at $39/month and scale with your usage.

2. Octoparse

Octoparse delivers comprehensive website data extraction through visual workflow creation that doesn't require programming knowledge. The platform combines ease of use with sophisticated capabilities for handling complex extraction scenarios.

Octoparse's strength lies in its extensive template library containing over 500 pre-built extraction workflows for popular websites and common business scenarios. Users can implement these templates immediately or customize them while maintaining visual workflow management.

Core features include:

- Point-and-click workflow creation with visual interface

- Cloud-based extraction ensuring continuous operation

- Advanced scheduling for automated data collection

- IP rotation and proxy management for reliable access

- Multiple export formats for immediate business integration

The platform handles JavaScript-rendered content, AJAX requests, and complex authentication automatically while maintaining user-friendly operation. Advanced users can implement custom logic through conditional workflows and data transformation rules.

Best for: Business users requiring regular data collection without technical expertise, teams needing reliable automated extraction.

Pricing: Free plan available; paid plans start at $75/month.

3. ParseHub

ParseHub leverages machine learning algorithms to extract data from websites automatically, adapting to layout changes and content variations without manual intervention. The platform combines intelligent recognition with visual workflow creation.

ParseHub's machine learning engine analyzes website structures and identifies data patterns automatically, reducing setup time and maintenance overhead. The system adapts to website modifications while maintaining extraction accuracy and reliability.

Advanced automation features:

- Automatic pattern recognition for similar content across pages

- Intelligent navigation handling pagination and dropdown menus

- Dynamic content processing for JavaScript-heavy applications

- Built-in data validation and quality control mechanisms

- API access for programmatic control and integration

The platform excels for organizations requiring consistent data from frequently changing websites where manual maintenance would be prohibitive. ParseHub's intelligence maintains extraction quality despite website evolution.
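Pagination handling appears among the navigation features listed above. At its core, it means following "next page" links until there are none left. The standard-library sketch below shows that loop. It assumes the site marks its next link with `rel="next"`, which many sites do but not all. This is a generic illustration, not ParseHub's implementation, and the function names are hypothetical.

```python
from html.parser import HTMLParser
from typing import Callable, Optional
from urllib.parse import urljoin


class NextLinkFinder(HTMLParser):
    """Remembers the href of the first <a rel="next"> element on a page."""

    def __init__(self) -> None:
        super().__init__()
        self.next_href: Optional[str] = None

    def handle_starttag(self, tag, attrs):
        attributes = dict(attrs)
        if tag == "a" and attributes.get("rel") == "next" and self.next_href is None:
            self.next_href = attributes.get("href")


def crawl_pages(start_url: str, fetch: Callable[[str], str], max_pages: int = 50) -> list[str]:
    """Follow rel="next" links; stop at the last page, on a loop, or at max_pages."""
    visited: list[str] = []
    url: Optional[str] = start_url
    while url is not None and url not in visited and len(visited) < max_pages:
        visited.append(url)
        finder = NextLinkFinder()
        finder.feed(fetch(url))
        finder.close()
        # Relative links such as "/p2" are resolved against the current page URL.
        url = urljoin(url, finder.next_href) if finder.next_href else None
    return visited
```

The `max_pages` cap and the visited-URL check are both there so that a malformed "next" link cannot trap the crawler in an endless loop.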

Best for: Organizations with frequently changing data sources, teams requiring adaptive extraction capabilities.

Pricing: Free plan available; paid plans start at $149/month.

4. Apify

Apify provides enterprise-grade website data extraction through cloud infrastructure and an extensive automation marketplace. The platform offers both ready-made solutions and custom development frameworks for comprehensive data collection.

Apify's marketplace contains over 1,600 pre-built extractors for popular websites and common extraction scenarios. Users can deploy these solutions immediately or modify them for specific requirements while benefiting from community-maintained updates.

Enterprise capabilities include:

- Headless browser automation for complex interactions

- Sophisticated proxy management and anti-bot evasion

- Custom code support through JavaScript and Python

- Comprehensive monitoring and performance analytics

- Scalable cloud infrastructure handling high-volume operations

The platform accommodates both technical and non-technical users through different interface options while providing enterprise-grade reliability and performance for mission-critical data operations.

Best for: Organizations with diverse extraction requirements, teams needing both simplicity and advanced customization.

Pricing: Free tier available; paid plans start at $49/month.

5. ScrapingBee

ScrapingBee specializes in API-driven website data extraction that handles complex technical challenges through managed infrastructure. The service eliminates the complexity of browser automation, proxy management, and anti-bot evasion.

ScrapingBee's API approach simplifies integration while handling sophisticated technical requirements automatically. Developers can implement extraction capabilities through simple REST API calls without managing infrastructure complexity.

Technical automation features:

- Headless browser rendering for JavaScript-heavy applications

- Residential proxy rotation preventing IP blocking

- Automatic CAPTCHA solving and anti-bot evasion

- Custom header support and session persistence

- Screenshot capabilities alongside content extraction

The service maintains high success rates against protected websites through advanced evasion techniques while providing reliable extraction infrastructure for custom applications and integrations.
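With a scraping API like this, you typically call the service by passing the target page and any rendering options as query parameters. The sketch below only builds such a request URL. The endpoint and the parameter names (`api_key`, `url`, `render_js`) are placeholders for illustration, so check ScrapingBee's API documentation for the real ones before adapting it.

```python
from urllib.parse import urlencode

# Hypothetical endpoint; substitute the provider's documented base URL.
API_ENDPOINT = "https://api.scraper.example/v1/"


def build_request_url(api_key: str, target_url: str, render_js: bool = True) -> str:
    """Return a GET URL asking the (hypothetical) API to fetch target_url for us."""
    params = {
        "api_key": api_key,
        # urlencode percent-encodes the target, so its own ?, & and = survive intact.
        "url": target_url,
        "render_js": "true" if render_js else "false",
    }
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_request_url("YOUR_API_KEY", "https://example.com/item?id=1&ref=x", render_js=False)
print(request_url)
```

You could then fetch the resulting URL with any HTTP client, for example `urllib.request.urlopen(request_url, timeout=60)`. A service of this kind typically responds with the body of the page it fetched on your behalf.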

Best for: Developers requiring reliable extraction APIs, technical teams building custom applications.

Pricing: Free tier with 1,000 calls; paid plans start at $29/month.

6. Import.io

Import.io delivers comprehensive website data extraction through an enterprise-grade platform designed for large-scale, mission-critical operations. The solution combines automated extraction with data processing and business system integration.

Import.io's enterprise focus addresses organizational requirements beyond basic extraction, including compliance monitoring, role-based access controls, and comprehensive audit trails for regulated industries.

Advanced enterprise features:

- Multi-page workflow automation with complex logic

- Real-time data validation and quality assurance

- API integrations with business intelligence platforms

- Automated delivery to databases and applications

- Enterprise security and compliance monitoring

The platform handles complex scenarios including form submissions, authenticated access, and multi-step processes while maintaining data quality through comprehensive validation and cleansing operations.

Best for: Large organizations requiring enterprise-grade extraction with compliance and security features.

Pricing: Custom enterprise pricing based on volume and requirements.

7. Scrapy

Scrapy offers powerful website data extraction through an open-source Python framework designed for developers requiring maximum control and customization. The framework provides comprehensive extraction capabilities without licensing constraints.

Scrapy's architecture supports high-performance, scalable operations through concurrent processing, intelligent retry mechanisms, and comprehensive middleware systems for handling complex extraction scenarios.
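Scrapy ships its own retry and throttling machinery, configured through settings such as `RETRY_TIMES` and `AUTOTHROTTLE_ENABLED`, so inside a Scrapy project you would not hand-roll this. As a framework-independent illustration of the idea behind retry mechanisms, here is a small exponential-backoff helper. `TransientError` is a hypothetical exception that your fetch code would raise on timeouts or HTTP 429/503 responses.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


class TransientError(Exception):
    """Hypothetical marker for retryable failures such as timeouts or HTTP 429/503."""


def with_retries(
    fn: Callable[[], T],
    max_attempts: int = 4,
    base_delay: float = 0.5,
    sleep: Callable[[float], None] = time.sleep,
) -> T:
    """Call fn, retrying TransientError with exponential backoff plus random jitter."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(1, max_attempts + 1):
        try:
            return fn()
        except TransientError:
            if attempt == max_attempts:
                raise  # out of attempts: let the caller see the last error
            delay = base_delay * 2 ** (attempt - 1)  # 0.5 s, 1 s, 2 s, ...
            sleep(delay + random.uniform(0, delay / 2))  # jitter avoids synchronized retries
    raise AssertionError("unreachable")
```

Making `sleep` a parameter keeps the helper easy to test. In normal use, the default `time.sleep` applies.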

Developer-focused capabilities:

- Complete customization through Python programming

- Built-in support for robots.txt compliance and ethical extraction

- Pipeline processing for real-time data transformation

- Integration with popular data science and analysis libraries

- Extensive logging and monitoring for production operations

The framework integrates smoothly with existing Python infrastructure, and it can deliver enterprise-grade performance when experienced development teams implement and configure it properly.
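In Scrapy, you switch on robots.txt compliance with the `ROBOTSTXT_OBEY = True` setting. To show what such a check actually evaluates, this sketch uses Python's standard-library `urllib.robotparser` against a hypothetical robots.txt file supplied as a string, so it runs without network access.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; normally fetched from https://<site>/robots.txt.
ROBOTS_TXT = """\
User-agent: *
Disallow: /checkout/
Crawl-delay: 2
"""


def load_policy(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a policy object that answers can_fetch() queries."""
    policy = RobotFileParser()
    policy.parse(robots_txt.splitlines())
    return policy


policy = load_policy(ROBOTS_TXT)
for url in ("https://shop.example/products/42", "https://shop.example/checkout/cart"):
    print(url, "allowed" if policy.can_fetch("example-bot", url) else "disallowed")
print("crawl delay (s):", policy.crawl_delay("example-bot"))
```

To check a live site instead, call `RobotFileParser(url)` followed by `.read()`, which downloads the file rather than parsing a string.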

Best for: Organizations with Python expertise requiring maximum customization, teams building custom extraction solutions.

Pricing: Free open-source software with optional commercial support.

8. Smartlead

Smartlead automates email and contact extraction through AI-powered identification and verification systems. The platform specializes in extracting and validating business contact information from websites and professional directories.

Smartlead's AI algorithms identify and extract contact information from various website formats while automatically validating email deliverability and contact quality through multiple verification methods.

Contact intelligence features:

- AI-powered email discovery from website content

- Automatic contact verification and deliverability testing

- Professional role identification and categorization

- Social profile connection and additional data enrichment

- CRM integration for immediate contact database updates

The platform focuses specifically on sales and marketing use cases where contact quality and deliverability matter more than volume, providing advanced prospecting capabilities for business development teams.
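The sketch below roughly illustrates the discovery half of this workflow by pulling email-like strings out of page text with a regular expression. It is not how Smartlead works internally. The pattern is a deliberate simplification rather than an RFC 5322 validator, and it does nothing about deliverability. The verification steps described above, such as mail-server checks, are separate and out of scope here.

```python
import re

# Simplified pattern: local part, "@", domain with at least one dot and a 2+ letter TLD.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def find_emails(page_text: str) -> list[str]:
    """Return unique, lowercased addresses in the order they first appear."""
    unique: dict[str, None] = {}  # dicts preserve insertion order
    for candidate in EMAIL_RE.findall(page_text):
        unique.setdefault(candidate.lower(), None)
    return list(unique)


html = 'Write to <a href="mailto:Sales@Example.com">Sales@Example.com</a> or support@example.co.uk.'
print(find_emails(html))
```

Deduplicating case-insensitively matters because the same address often appears both in a `mailto:` link and in the visible link text.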

Best for: Sales teams requiring verified contact information, marketing professionals building outreach lists.

Pricing: Plans start at $39/month with contact verification included.

No-Code Solutions for Business Teams

Modern website data extraction has evolved beyond technical tools to include business-friendly platforms that anyone can use effectively. These solutions eliminate programming requirements while delivering professional-grade extraction capabilities.

9. Mozenda

Mozenda provides comprehensive website data extraction through cloud infrastructure specifically designed for business users rather than developers. The platform combines visual workflow creation with enterprise-grade reliability.

Mozenda's business focus includes comprehensive data management beyond extraction, featuring validation, transformation, and integration capabilities that deliver analysis-ready information automatically.

Business-oriented features:

- Visual workflow creation without programming requirements

- Automated data validation and quality control processes

- Multiple export formats compatible with business applications

- Scheduled extraction ensuring consistent data freshness

- Comprehensive monitoring and performance reporting

The platform handles technical challenges automatically while providing business users with control over data collection processes and delivery schedules through intuitive interfaces.

Best for: Business analysts requiring reliable extraction without technical dependencies, organizations preferring managed cloud services.

Pricing: Plans start at $99/month with enterprise options available.

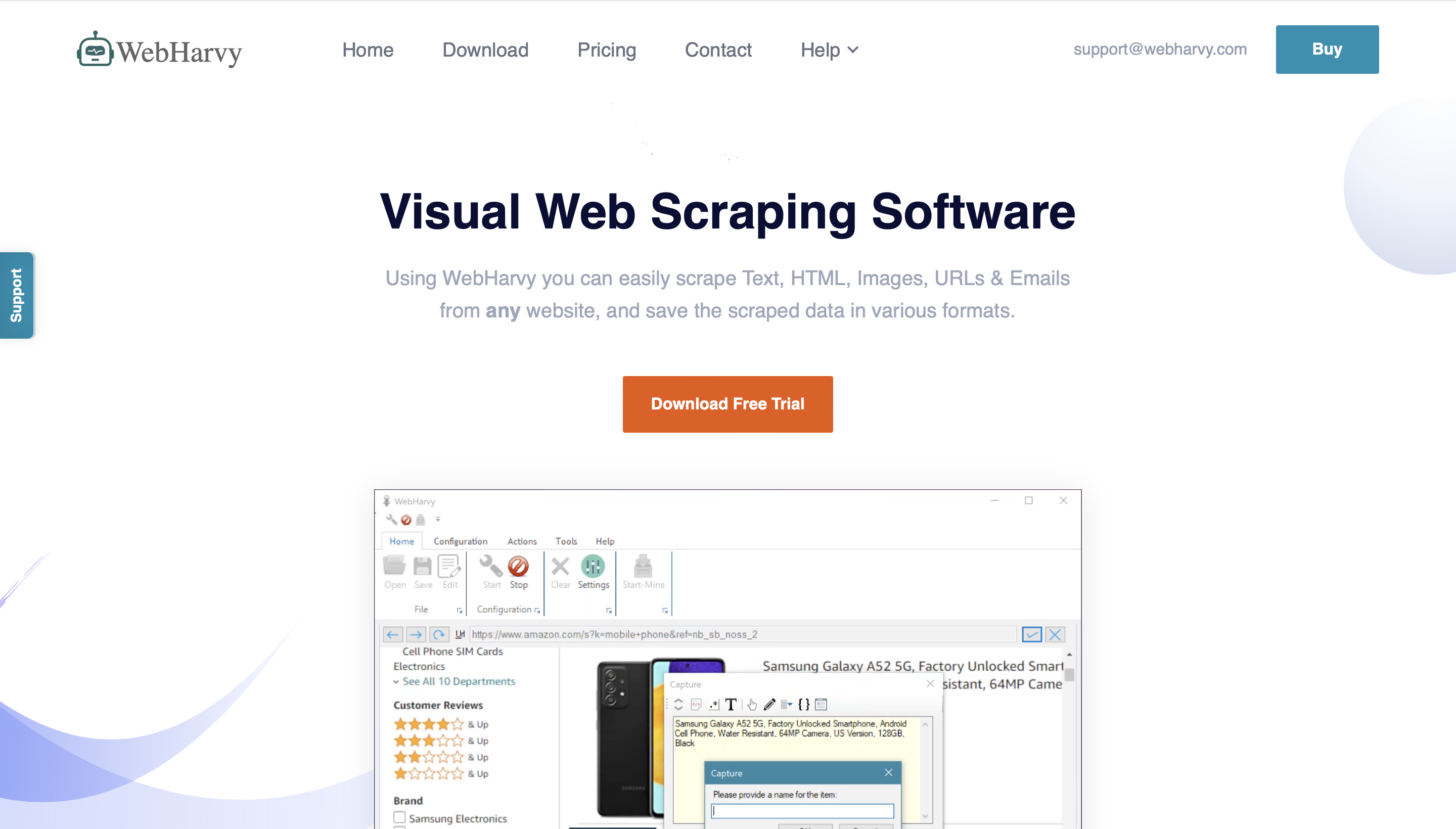

10. WebHarvy

WebHarvy delivers intuitive website data extraction through a desktop application that enables immediate data collection from any website. The tool provides point-and-click interfaces for users new to extraction concepts.

WebHarvy's pattern recognition automatically identifies similar content across multiple pages, enabling efficient extraction from large websites without manual configuration for each individual page or data element.

User-friendly capabilities:

- Visual data selection through clicking desired elements

- Automatic pattern recognition for similar content

- Built-in scheduling for regular data collection

- Multiple export formats for immediate analysis

- Offline operation with complete local control

The desktop approach provides immediate functionality without cloud dependencies while maintaining sufficient sophistication for most business extraction requirements.
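One crude way to approximate this kind of pattern recognition is to count how often each tag-and-class combination repeats on a page. Product cards, search results, and table rows tend to share one. The sketch below does exactly that with the standard library. It is a simplified heuristic, not WebHarvy's algorithm, and the `min_repeats` threshold is arbitrary.

```python
from collections import Counter
from html.parser import HTMLParser


class SignatureCounter(HTMLParser):
    """Counts how many times each (tag, class attribute) pair occurs."""

    def __init__(self) -> None:
        super().__init__()
        self.counts: Counter = Counter()

    def handle_starttag(self, tag, attrs):
        css_class = dict(attrs).get("class")
        if css_class:
            self.counts[(tag, css_class)] += 1


def repeated_signatures(html: str, min_repeats: int = 3) -> list[tuple[str, str]]:
    """Return (tag, class) pairs seen at least min_repeats times, most frequent first."""
    counter = SignatureCounter()
    counter.feed(html)
    counter.close()
    return [sig for sig, count in counter.counts.most_common() if count >= min_repeats]


card = '<div class="card"><span class="name">{}</span><span class="price">{}</span></div>'
page = '<div class="header">Shop</div>' + "".join(
    card.format(name, price) for name, price in [("Lamp", "$19"), ("Chair", "$45"), ("Desk", "$120")]
)
print(repeated_signatures(page))
```

Each repeated signature is a candidate record container. A point-and-click tool would then let you choose which fields inside it to keep.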

Best for: Small businesses requiring straightforward extraction, users preferring local control over data.

Pricing: One-time license fee of $139.

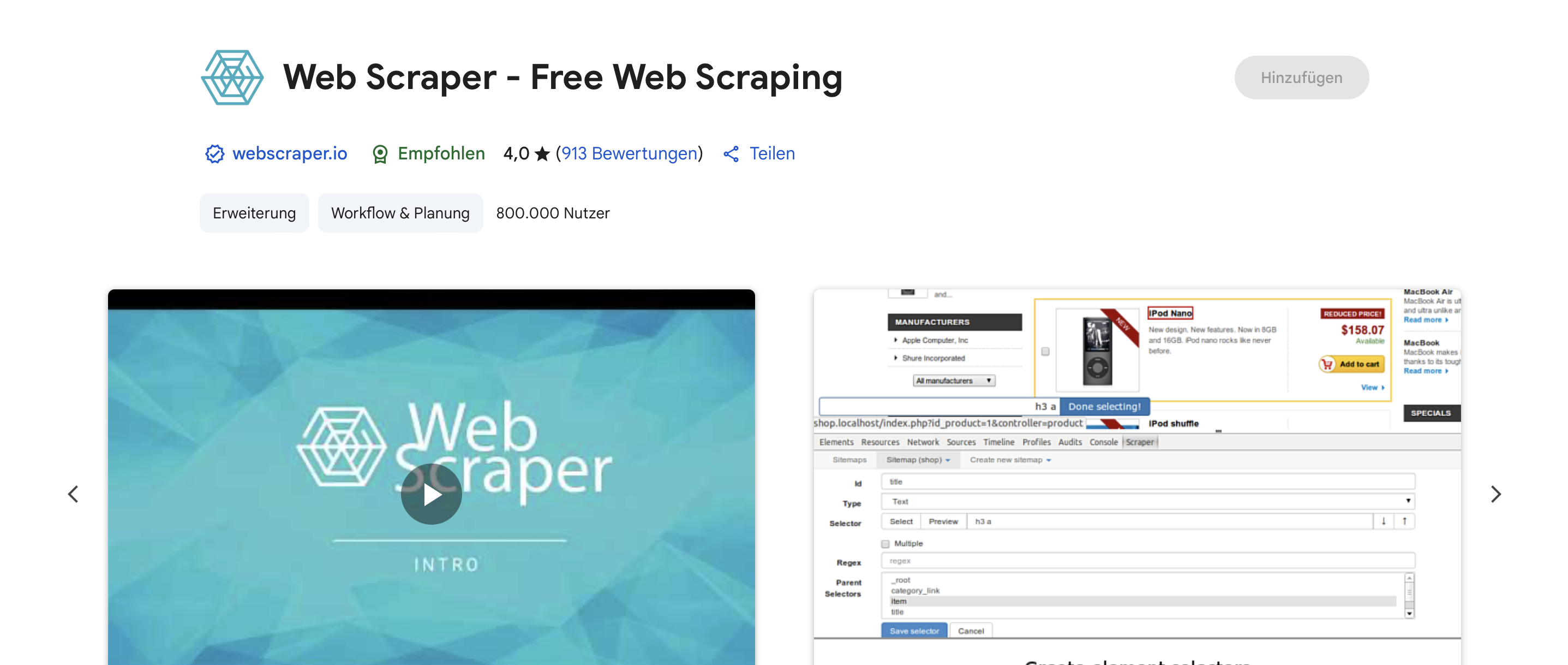

11. Web Scraper Chrome Extension

Web Scraper enables immediate website data extraction through a browser extension that works on any website you visit. The tool provides the most accessible entry point for users new to data extraction concepts.

Web Scraper's browser integration allows immediate extraction from any website through visual selection while automatically generating extraction configurations that can be reused and shared.

Accessibility features:

- Immediate extraction from any website through browser integration

- Point-and-click data selection with visual feedback

- Automatic configuration generation and sharing

- Cloud services for scheduled extraction operations

- CSV and JSON export for immediate analysis

The extension represents the lowest barrier to entry for website data extraction while providing sufficient capability for ad-hoc research and regular monitoring requirements.

Best for: Users new to data extraction, teams requiring immediate extraction capability without setup.

Pricing: Free extension with optional cloud services starting at $50/month.

Advanced Developer Solutions

Technical teams requiring maximum control and customization benefit from developer-focused extraction tools that provide comprehensive capabilities through programming interfaces and frameworks.

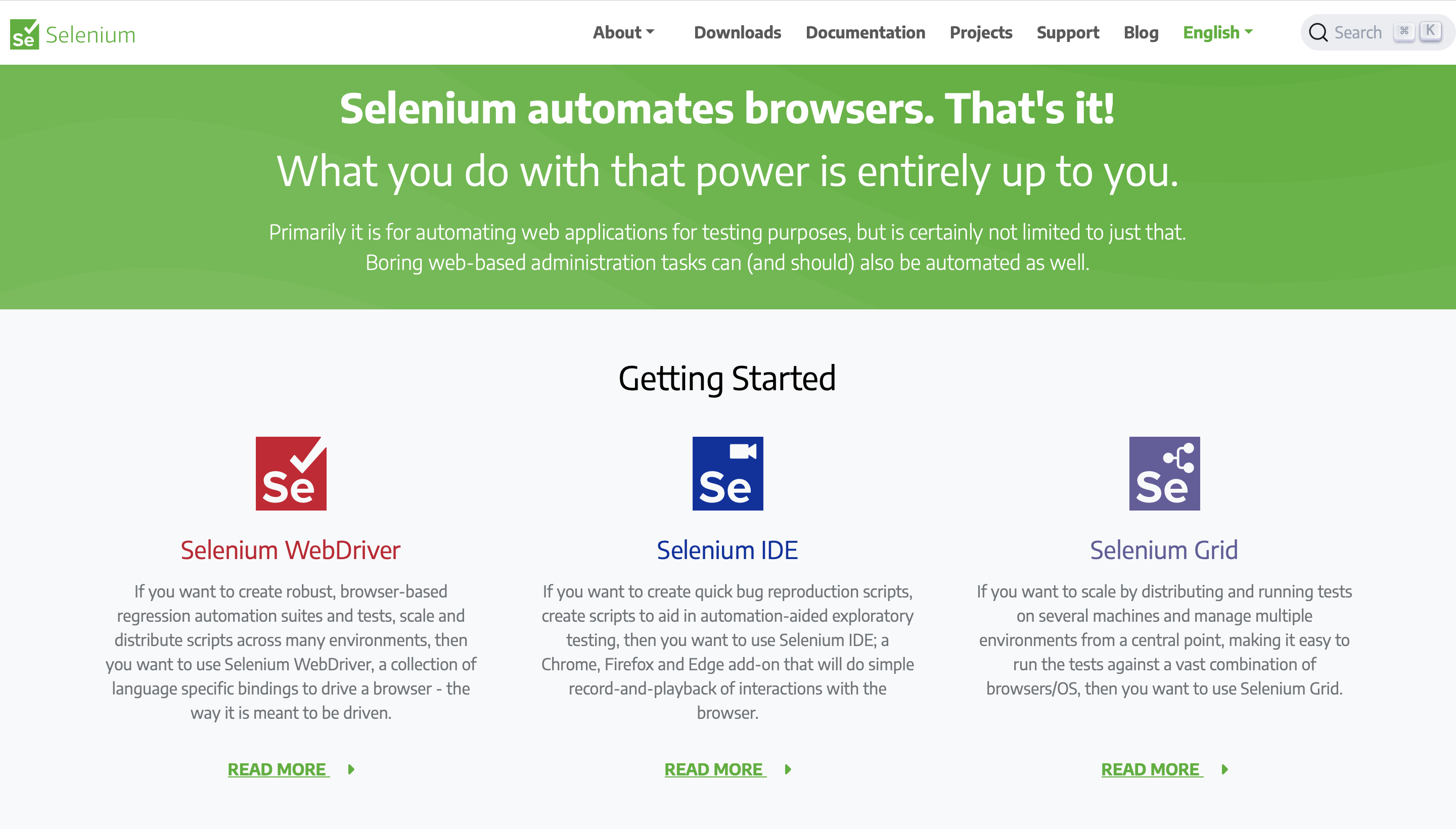

12. Selenium

Selenium enables sophisticated website data extraction through complete browser automation that handles the most complex web applications. The framework provides authentic browser environments for dynamic content extraction.

Selenium's browser automation replicates genuine user interactions including form completion, navigation, and authentication workflows that enable extraction from protected or complex web applications.

Advanced automation capabilities:

- Complete browser control for complex user interactions

- Support for multiple browsers ensuring broad compatibility

- Screenshot capture and element interaction monitoring

- Integration with multiple programming languages

- Comprehensive error handling for production reliability

The framework excels for extracting data from single-page applications, progressive web apps, and sites requiring complex authentication or user interaction sequences.
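The key technique for single-page applications is to wait for content to appear instead of reading the page as soon as it loads. Selenium provides this through explicit waits, for example `WebDriverWait(driver, 10).until(EC.presence_of_element_located((By.CSS_SELECTOR, ".price")))`. The browser-free sketch below reproduces the same polling idea with a generic condition function, so it runs without Selenium or a browser installed. `wait_until` is a hypothetical helper, not part of Selenium's API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


def wait_until(
    condition: Callable[[], Optional[T]],
    timeout: float = 10.0,
    poll_interval: float = 0.5,
) -> T:
    """Poll condition() until it returns a truthy value, or raise TimeoutError."""
    deadline = time.monotonic() + timeout
    while True:
        result = condition()
        if result:
            return result  # e.g. the element once JavaScript has rendered it
        if time.monotonic() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        time.sleep(poll_interval)


# Simulated page that "renders" the price on the third check.
checks = {"count": 0}


def price_element():
    checks["count"] += 1
    return "$19.99" if checks["count"] >= 3 else None


print(wait_until(price_element, timeout=2, poll_interval=0.01))
```

Polling a condition with a deadline is more reliable than fixed `time.sleep` calls. It returns as soon as the content is ready and fails loudly when it never appears.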

Best for: Developers requiring authentic browser simulation, complex web applications with sophisticated user interfaces.

Pricing: Free open-source framework with optional commercial support services.

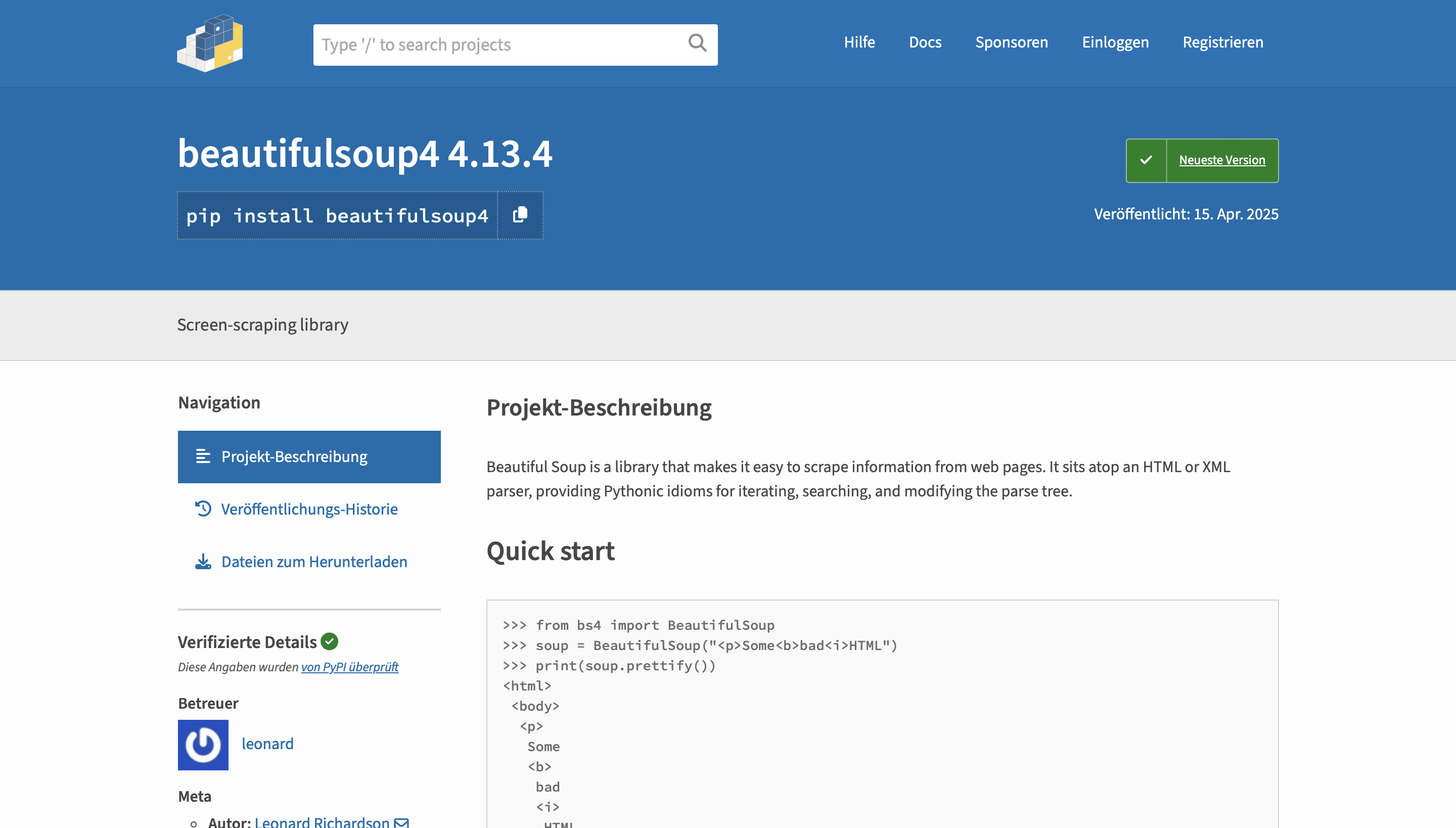

13. BeautifulSoup

BeautifulSoup provides fundamental website data extraction capabilities through a Python library for parsing HTML and XML, giving developers precise control over what content is extracted.

BeautifulSoup's parsing capabilities handle malformed HTML gracefully while providing intuitive navigation methods for locating specific content within complex document structures.

Python integration benefits:

- Simple, readable syntax for extraction logic

- Graceful handling of malformed HTML and XML

- Integration with the requests library for complete workflows

- Compatibility with data science and analysis libraries

- Extensive community documentation and examples

The library serves as a foundation for custom extraction solutions, and its simplicity makes it ideal for learning extraction concepts and building specialized tools.
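With BeautifulSoup installed, you can pull every price from a listing with roughly `[el.get_text(strip=True) for el in BeautifulSoup(html, "html.parser").select(".price")]`. To keep the example dependency-free and runnable anywhere, the sketch below builds a narrower version of that idea on the standard library's `html.parser`, one of the parser backends BeautifulSoup can use. It also shows the parser coping with unclosed `<li>` tags. The class and function names are illustrative.

```python
from html.parser import HTMLParser


class ClassTextExtractor(HTMLParser):
    """Collects the text that directly follows each start tag carrying target_class.

    Simplification: text is captured only up to the next tag, so nested markup
    inside a matching element is not followed.
    """

    def __init__(self, target_class: str) -> None:
        super().__init__()
        self.target_class = target_class
        self.results: list[str] = []
        self._capturing = False
        self._buffer: list[str] = []

    def _flush(self) -> None:
        text = "".join(self._buffer).strip()
        if self._capturing and text:
            self.results.append(text)
        self._capturing = False
        self._buffer = []

    def handle_starttag(self, tag, attrs):
        self._flush()  # any new tag ends the previous capture
        classes = (dict(attrs).get("class") or "").split()
        self._capturing = self.target_class in classes

    def handle_endtag(self, tag):
        self._flush()

    def handle_data(self, data):
        if self._capturing:
            self._buffer.append(data)

    def close(self) -> None:
        super().close()
        self._flush()  # keep text left open by a missing closing tag


def extract_by_class(html: str, target_class: str) -> list[str]:
    parser = ClassTextExtractor(target_class)
    parser.feed(html)
    parser.close()
    return parser.results


# Malformed listing: none of the <li> elements are closed.
listing = '<ul><li class="name">Desk Lamp<li class="price">$19.99<li class="name">Chair<li class="price">$24.50</ul>'
print(extract_by_class(listing, "price"))
```

For anything beyond simple pages, BeautifulSoup's CSS selectors and tree navigation are the more robust choice. This sketch only shows the underlying parsing events.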

Best for: Python developers requiring precise control, organizations building custom extraction solutions.

Pricing: Free open-source library.

14. Bright Data

Bright Data enables large-scale website data extraction through a comprehensive proxy network and infrastructure services. The platform provides the foundation for enterprise extraction operations requiring global reach and anonymity.

Bright Data's proxy network includes residential, datacenter, and mobile IPs with global coverage and sophisticated rotation algorithms that maintain access reliability across diverse extraction scenarios.

Infrastructure capabilities:

- Global proxy network with residential IP coverage

- Automatic rotation and failure recovery systems

- Real-time monitoring and performance optimization

- Integration with popular extraction frameworks

- Comprehensive compliance and legal guidance

The platform addresses infrastructure challenges of large-scale extraction while providing the reliability and performance required for mission-critical data operations.
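Rotation with failure recovery, as described above, can be pictured as a round-robin queue that drops proxies that keep failing. The sketch below is a generic illustration with hypothetical proxy addresses and an injected `fetch_via(url, proxy)` function. It is not Bright Data's API, and a managed proxy network performs this step for you.

```python
from collections import deque
from typing import Callable, Optional


class ProxyPool:
    """Round-robin proxy rotation that drops proxies after repeated failures."""

    def __init__(self, proxies: list[str], max_failures: int = 2) -> None:
        self._queue = deque(proxies)
        self._failures = {proxy: 0 for proxy in proxies}
        self.max_failures = max_failures

    def next_proxy(self) -> str:
        if not self._queue:
            raise RuntimeError("no healthy proxies left")
        proxy = self._queue[0]
        self._queue.rotate(-1)  # the chosen proxy moves to the back of the line
        return proxy

    def report(self, proxy: str, ok: bool) -> None:
        if ok:
            self._failures[proxy] = 0  # a success resets the failure streak
            return
        self._failures[proxy] += 1
        if self._failures[proxy] >= self.max_failures and proxy in self._queue:
            self._queue.remove(proxy)  # stop routing traffic through it


def fetch_with_rotation(
    url: str,
    pool: ProxyPool,
    fetch_via: Callable[[str, str], str],
    attempts: int = 3,
) -> str:
    """Try up to `attempts` proxies in turn; network errors fail over to the next one."""
    last_error: Optional[Exception] = None
    for _ in range(attempts):
        proxy = pool.next_proxy()
        try:
            body = fetch_via(url, proxy)
        except OSError as exc:  # ConnectionError, timeouts, etc.
            pool.report(proxy, ok=False)
            last_error = exc
            continue
        pool.report(proxy, ok=True)
        return body
    raise RuntimeError(f"all {attempts} attempts failed for {url}") from last_error
```

Resetting the failure count after a success means that a single transient error never removes an otherwise healthy proxy.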

Best for: Organizations requiring large-scale extraction infrastructure, teams with existing extraction capabilities needing proxy services.

Pricing: Usage-based pricing starting at $500/month.

Specialized Industry Solutions

Certain extraction scenarios require specialized tools optimized for specific use cases, data types, or industry requirements that generic platforms cannot address effectively.

15. Diffbot

Diffbot revolutionizes website data extraction through artificial intelligence that understands web content semantically rather than structurally. The platform builds comprehensive knowledge graphs from extracted information automatically.

Diffbot's AI capabilities include automatic content classification and entity recognition that creates structured knowledge from unstructured web content while maintaining comprehensive relationship mapping.

Knowledge graph features:

- Semantic content understanding beyond HTML structure

- Automatic entity recognition and relationship mapping

- Continuously updated knowledge base with millions of entities

- Custom AI training for specific industry domains

- Comprehensive APIs for business application integration

The platform transforms web content into actionable business intelligence through semantic understanding rather than basic data extraction approaches.

Best for: Organizations requiring intelligent content understanding, teams needing semantic analysis capabilities.

Pricing: Custom enterprise pricing based on knowledge graph access.

16. Scrapfly

Scrapfly specializes in website data extraction that overcomes sophisticated anti-bot measures through advanced evasion techniques and residential proxy networks focused on maintaining high success rates.

Scrapfly's infrastructure includes browser fingerprinting and behavioral simulation that avoids detection while maintaining extraction efficiency against heavily protected websites.

Anti-detection capabilities:

- Advanced browser fingerprinting and behavioral simulation

- Residential proxy networks with intelligent rotation

- Automatic CAPTCHA solving and rate limit handling

- Custom header management and session persistence

- JavaScript execution in realistic browser environments

The platform excels for consistent access to heavily protected websites where traditional extraction methods encounter blocking or detection.

Best for: Organizations requiring access to protected websites, teams facing consistent blocking issues.

Pricing: Free tier available; paid plans start at $30/month.

17. ScrapeHero Cloud

ScrapeHero Cloud provides fully managed website data extraction through professional services that handle all technical aspects of data collection operations. The platform combines custom development with managed operations.

ScrapeHero's managed approach covers custom extraction development, ongoing maintenance, and data delivery in your preferred formats and on your preferred schedules, with ScrapeHero handling all technical challenges.

Managed service benefits:

- Custom extraction development for specific requirements

- Professional maintenance and ongoing support

- Automated quality assurance and issue resolution

- Flexible data delivery through multiple formats

- Dedicated support teams for immediate assistance

The platform eliminates operational complexity while delivering professional results through experienced extraction specialists and proven methodologies.

Best for: Organizations requiring extraction without internal technical resources, teams needing professional management services.

Pricing: Custom project-based pricing.

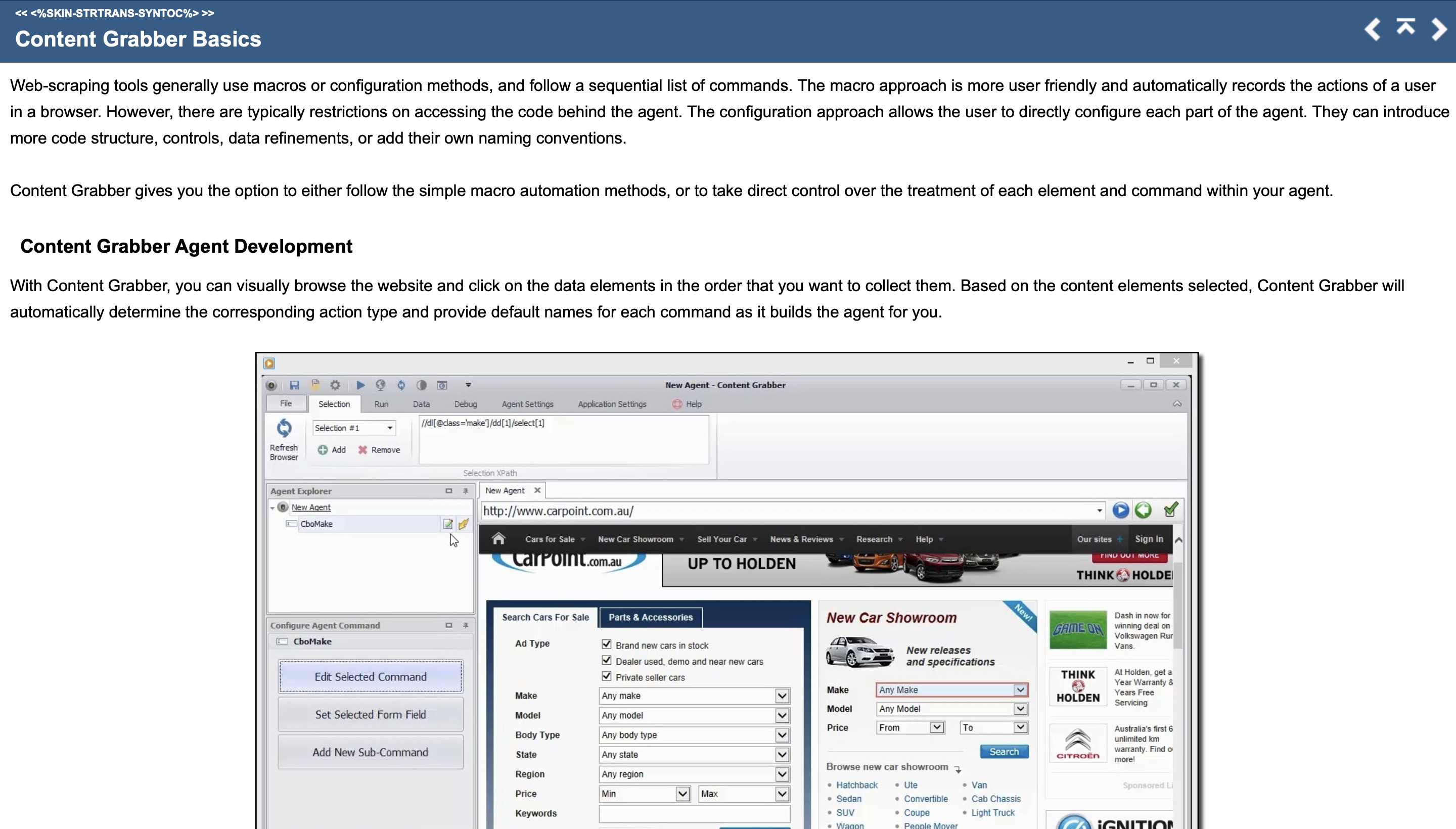

18. Content Grabber

Content Grabber offers professional-grade website data extraction through sophisticated desktop software designed for complex extraction requirements with extensive customization options.

Content Grabber's advanced features include custom script support, database integration, and comprehensive error handling for robust extraction operations in demanding environments.

Professional capabilities:

- Visual interface combined with custom scripting options

- Database integration and automated delivery systems

- Command-line operation for enterprise automation

- Comprehensive logging and monitoring capabilities

- Advanced authentication and session management

The premium desktop approach delivers enterprise functionality through local deployment with complete control over extraction processes and data handling.
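Database delivery, one of the capabilities listed above, usually means upserting each scraped record, so that re-runs update existing rows instead of duplicating them. Here is a minimal sketch using Python's built-in `sqlite3` module with a hypothetical `products` table keyed by URL. Content Grabber's own database connectors are configured inside the product and are not shown here.

```python
import sqlite3


def deliver_to_sqlite(conn: sqlite3.Connection, records: list[dict]) -> int:
    """Insert or update scraped records keyed by URL; returns how many were written."""
    with conn:  # commits on success, rolls back if a statement fails
        conn.execute(
            "CREATE TABLE IF NOT EXISTS products ("
            "url TEXT PRIMARY KEY, name TEXT NOT NULL, price REAL, "
            "scraped_at TEXT DEFAULT CURRENT_TIMESTAMP)"
        )
        # INSERT OR REPLACE overwrites the existing row when the URL is already present.
        conn.executemany(
            "INSERT OR REPLACE INTO products (url, name, price) VALUES (:url, :name, :price)",
            records,
        )
    return len(records)


conn = sqlite3.connect(":memory:")
deliver_to_sqlite(conn, [
    {"url": "https://shop.example/lamp", "name": "Desk Lamp", "price": 19.99},
    {"url": "https://shop.example/chair", "name": "Chair", "price": 24.50},
])
# A later run with a changed price updates the row rather than adding a duplicate.
deliver_to_sqlite(conn, [{"url": "https://shop.example/lamp", "name": "Desk Lamp", "price": 17.99}])
print(conn.execute("SELECT url, price FROM products ORDER BY url").fetchall())
```

Keying on the URL keeps repeated scheduled runs idempotent. Tracking price history would instead require appending rows with a timestamp.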

Best for: Organizations requiring sophisticated local extraction capabilities, teams needing extensive customization options.

Pricing: Professional licenses start at $495.

Simple API Solutions for Quick Integration

Developers requiring straightforward extraction capabilities benefit from API services that eliminate infrastructure complexity while providing reliable data collection through simple integration methods.

19. Zenscrape

Zenscrape provides straightforward website data extraction through a REST API designed for developers who need reliable content retrieval without managing browser automation or proxy infrastructure.

Zenscrape's API includes JavaScript rendering and proxy rotation through simple parameter configuration that enables developers to integrate extraction capabilities without extensive technical overhead.

API integration features:

- Simple REST API with comprehensive documentation

- JavaScript rendering and basic anti-bot evasion

- Multiple response formats including HTML and JSON

- Screenshot capture alongside content extraction

- Reliable infrastructure with performance monitoring

The service simplifies extraction integration while maintaining professional functionality sufficient for most business applications and development requirements.

Best for: Developers requiring simple extraction APIs, small teams needing reliable basic capabilities.

Pricing: Free tier available; paid plans start at $29/month.

20. ScrapOwl

ScrapOwl offers cost-effective website data extraction through an API service designed for budget-conscious organizations and individual developers requiring essential extraction capabilities.

ScrapOwl maintains competitive pricing while delivering reliable basic functionality including JavaScript rendering and proxy rotation sufficient for common extraction scenarios.

Budget-focused features:

- Affordable pricing with essential extraction capabilities

- Basic JavaScript rendering and proxy rotation

- Simple API integration with standard documentation

- Reasonable success rates for most common websites

- Transparent pricing without hidden fees or complexity

The platform prioritizes affordability while maintaining functional extraction services for organizations with limited budgets or simple requirements.

Best for: Budget-conscious organizations, individual developers requiring basic extraction capabilities.

Pricing: Free tier available; paid plans start at $9/month.

Choosing the Right Extraction Solution

Selecting effective website data extraction tools requires matching platform capabilities with specific business requirements, technical resources, and long-term strategic objectives rather than simply comparing features.

Assess Your Technical Requirements

Start by evaluating your team's technical capabilities and resources. Non-technical teams benefit most from AI-powered platforms like Databar.ai or visual tools like Octoparse that eliminate programming requirements. Technical teams can leverage powerful frameworks like Scrapy or Selenium for maximum customization.

Consider the complexity of your target websites. Simple content sites work with basic tools, while JavaScript-heavy applications require browser automation. Protected sites need specialized anti-detection capabilities. Multiple source monitoring demands scalable infrastructure.

Define Your Data Integration Needs

Determine how extracted data integrates with existing business systems. Some platforms provide direct CRM integration, while others require custom development. API access enables automated workflows but also raises the technical requirements.

Plan for data quality and validation requirements. Advanced web scraping tools include built-in validation, while simpler solutions may require additional quality control processes.
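If your tool lacks built-in validation, a handful of explicit rules goes a long way. The sketch below checks a hypothetical product schema (URL, name, and a positive numeric price). It separates clean records from rejected ones and records the reasons for each rejection, so quality problems become visible instead of being silently loaded.

```python
from dataclasses import dataclass, field
from typing import Any

REQUIRED_FIELDS = ("url", "name", "price")  # hypothetical schema for this sketch


@dataclass
class ValidationReport:
    valid: list[dict] = field(default_factory=list)
    rejected: list[tuple[dict, list[str]]] = field(default_factory=list)


def check_record(record: dict[str, Any]) -> list[str]:
    """Return a list of problems; an empty list means the record passed."""
    problems = [f"missing {name}" for name in REQUIRED_FIELDS if record.get(name) in (None, "")]
    price = record.get("price")
    if price not in (None, ""):
        if isinstance(price, bool) or not isinstance(price, (int, float)):
            problems.append("price is not numeric")
        elif price <= 0:
            problems.append("price is not positive")
    return problems


def validate(records: list[dict[str, Any]]) -> ValidationReport:
    report = ValidationReport()
    for record in records:
        problems = check_record(record)
        if problems:
            report.rejected.append((record, problems))
        else:
            report.valid.append(record)
    return report


report = validate([
    {"url": "https://shop.example/lamp", "name": "Desk Lamp", "price": 19.99},
    {"url": "https://shop.example/chair", "name": "", "price": "N/A"},
    {"url": "https://shop.example/desk", "name": "Desk", "price": -5},
])
print(len(report.valid), "valid;", [reasons for _, reasons in report.rejected])
```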

Budget for Total Cost of Ownership

Consider all costs including licensing, setup, maintenance, and ongoing management. Free tools often require significant technical investment. Managed services eliminate internal overhead but increase operational costs. Calculate ROI based on time savings and competitive advantages.
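You can write down a back-of-the-envelope ROI calculation in a few lines. The figures in the usage line are hypothetical placeholders, not benchmarks: 5 hours saved per week, a $50 loaded hourly cost, and a $60/month subscription plus $40/month of amortized setup and maintenance. Substitute your own.

```python
def monthly_roi(
    hours_saved_per_week: float,
    hourly_cost: float,
    tool_cost_per_month: float,
    other_costs_per_month: float = 0.0,
    weeks_per_month: float = 52 / 12,
) -> dict[str, float]:
    """Compare the value of time saved with the total monthly cost of ownership."""
    total_cost = tool_cost_per_month + other_costs_per_month  # licensing + setup/maintenance
    if total_cost <= 0:
        raise ValueError("total monthly cost must be positive")
    value = hours_saved_per_week * weeks_per_month * hourly_cost
    net = value - total_cost
    return {"value_of_time_saved": value, "net_benefit": net, "roi": net / total_cost}


# Hypothetical inputs: 5 h/week saved, $50/h, $60 subscription + $40 amortized upkeep.
print(monthly_roi(5, 50, 60, other_costs_per_month=40))
```

With these placeholder numbers, the time saved is worth about $1,083 per month against $100 of cost. That gives a net benefit of about $983 and an ROI ratio of roughly 9.8. The point is the structure of the calculation, not the specific result.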

Plan for scaling requirements as data needs grow. Simple solutions may become inadequate, requiring migration to more sophisticated platforms. Enterprise tools provide growth runway but may be overkill initially.

Implementation Best Practices

Successful website data extraction requires strategic planning, proper configuration, and ongoing maintenance to achieve reliable results while minimizing technical and operational challenges.

Success requires matching extraction capabilities with business requirements rather than pursuing technical sophistication for its own sake. The most effective implementations focus on delivering actionable intelligence rather than collecting raw data.

Whether you need industry-specific prospecting data or comprehensive market intelligence, the right extraction platform transforms web content from scattered information into competitive advantage.

FAQ

What are the best website data extraction tools for beginners? Beginners get the best results with user-friendly platforms such as Databar.ai for AI-driven research, Octoparse for visual workflows, or the Web Scraper Chrome extension for immediate access. These tools offer point-and-click or natural language interfaces and deliver professional results without requiring any programming.

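How much do professional website data extraction tools cost? Among the tools covered here, many offer free tiers, entry-level paid plans range from about $9/month (ScrapOwl) to $149/month (ParseHub), and enterprise infrastructure such as Bright Data starts around $500/month. Some desktop tools use one-time licenses instead, such as WebHarvy at $139 and Content Grabber from $495. Consider total costs, including setup, maintenance, and integration, when evaluating options. Most organizations see positive ROI within 3-6 months through time savings and improved decision-making.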

Which extraction tools work best for non-technical teams? Non-technical teams benefit most from platforms like Databar.ai that understand natural language queries and let you extract information from any publicly available website based on a simple prompt.

How can businesses ensure data quality when extracting from websites? Implement automated validation rules checking data completeness and accuracy, use multiple extraction methods for verification, and establish monitoring systems detecting changes or errors. Regular quality audits and anomaly detection maintain consistent standards throughout extraction operations.
