Top 10 Web Scraping Tools That Actually Deliver Results in 2025

Best Web Scraping Solutions for Accurate and Efficient Data Extraction 2025

By Jan, June 03, 2025

The web scraping tools market has changed dramatically. Modern businesses extract billions of data points daily, yet many data teams report struggling with tool selection. The difference between success and failure often comes down to choosing the right platform for their specific needs.

Traditional approaches to web data extraction relied on manual copying or basic scripts. Today's web scraping tools handle JavaScript-heavy sites, bypass anti-bot systems, and process millions of pages automatically. The shift has been profound: teams using modern scraping platforms collect data much faster and see fewer extraction failures than teams relying on manual methods.

| Tool | Best For | Starting Price | Key Strength |
| --- | --- | --- | --- |
| Databar.ai | Data intelligence & enrichment | $39/month | 90+ data providers integration |
| Scrapy | Python developers | Free | Open-source flexibility |
| Beautiful Soup | Quick Python scripts | Free | Simple learning curve |
| Puppeteer | JavaScript automation | Free | Chrome DevTools protocol |
| Selenium | Cross-browser testing | Free | Developer-friendly |
| Octoparse | Visual workflow creation | $75/month | No-code interface |
| ParseHub | AI-powered extraction | Free tier | Machine learning adaptation |
| Apify | Pre-built scrapers | $49/month | Marketplace ecosystem |
| ScrapingBee | API integration | $49/month | Developer-friendly |
| Bright Data | Enterprise operations | Custom | Global proxy network |

What Makes a Great Web Scraping Tool in 2025?

Selecting web scraping tools requires understanding what separates professional platforms from basic utilities. The best solutions share several critical characteristics that enable reliable, scalable data extraction.

Data accuracy and reliability form the foundation. Professional tools maintain 95%+ extraction accuracy through intelligent content recognition and automatic adaptation to website changes. When a site updates its structure, advanced platforms adjust automatically rather than breaking completely.

Scalability and performance determine long-term viability. Whether extracting 100 or 1 million pages, the platform should handle volume increases without proportional complexity growth. Cloud infrastructure and distributed processing enable this scalability.

Anti-bot protection handling is increasingly important as websites implement sophisticated detection systems. Leading tools rotate proxies intelligently, mimic human behavior patterns, and handle CAPTCHAs automatically. Without these capabilities, even simple extraction projects can fail.
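To make proxy rotation and human-like pacing concrete, here is a minimal standard-library sketch. It is not how any tool in this list implements the feature. The `ProxyRotator` class, the `polite_delay` helper, and the proxy addresses (taken from the documentation-only 203.0.113.0/24 range) are all illustrative assumptions.

```python
import itertools
import random


class ProxyRotator:
    """Cycle through a fixed proxy list, skipping proxies marked as blocked."""

    def __init__(self, proxies):
        self._proxies = list(proxies)
        self._blocked = set()
        self._cycle = itertools.cycle(self._proxies)

    def mark_blocked(self, proxy):
        """Remember that a proxy was refused so it is skipped from now on."""
        self._blocked.add(proxy)

    def next_proxy(self):
        # Try each proxy at most once per call so we never loop forever.
        for _ in range(len(self._proxies)):
            proxy = next(self._cycle)
            if proxy not in self._blocked:
                return proxy
        raise RuntimeError("all proxies are marked as blocked")


def polite_delay(base_seconds=1.0, jitter=0.5, rng=random):
    """Return a randomized delay so requests are not sent at a fixed rhythm."""
    return base_seconds + rng.uniform(0, jitter)


# Placeholder addresses, not real proxies.
rotator = ProxyRotator([
    "http://203.0.113.10:8080",
    "http://203.0.113.11:8080",
    "http://203.0.113.12:8080",
])
```

In a real scraper you would call `rotator.next_proxy()` before each request, sleep for `polite_delay()` seconds between requests, and call `mark_blocked()` when a proxy starts returning block pages. Commercial platforms add much more on top, such as CAPTCHA handling and browser fingerprinting.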

Integration capabilities connect extracted data with business workflows. API access, webhook support, and native integrations with data platforms transform raw extraction into actionable intelligence. The best tools fit seamlessly into existing tech stacks.

Top 10 Web Scraping Tools: Detailed Analysis

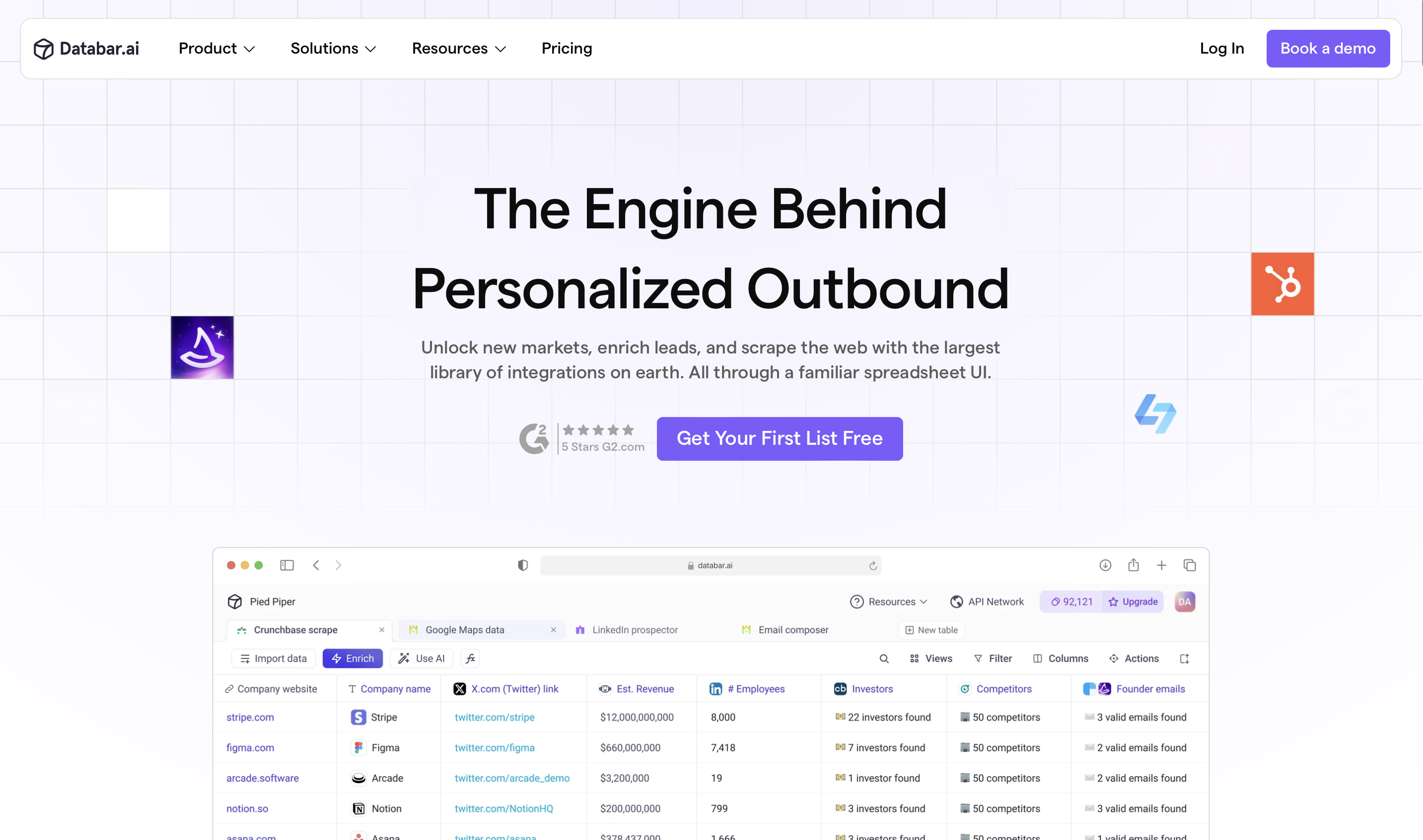

1. Databar.ai - Beyond Traditional Scraping

Databar.ai goes beyond basic web scraping by not only extracting data but also enriching it. Unlike platforms that simply pull HTML content, Databar.ai creates complete business intelligence from web data.

The platform's unique approach integrates web scraping with access to 90+ premium data providers. Extract a company's basic information from their website, and Databar.ai automatically enriches it with contact details, funding data, technology insights, and competitive intelligence. This creates actionable prospect profiles rather than raw data dumps.

Key capabilities that set Databar.ai apart:

Chrome extension functionality provides familiar browser-based workflows. Extract data while browsing, then enhance it with data from 90+ sources without platform switching.

The AI research agent understands context beyond HTML parsing. Ask natural language questions like "find all case studies mentioning ROI improvements" and receive structured, analyzed results. The agent visits websites, comprehends content relationships, and compiles insights automatically.

Transparent usage-based pricing starting at $39/month makes enterprise-grade capabilities accessible. No hidden fees or complex credit systems - just predictable costs that scale with usage.

Best for: Teams requiring in-depth data intelligence beyond basic extraction, especially for sales prospecting, market research, and competitive analysis. Start your free Databar.ai trial today.

2. Scrapy

Scrapy remains the gold standard for Python developers building custom web scraping tools. This open-source framework provides complete control over extraction logic while handling complex technical challenges.

The framework excels through its asynchronous architecture, processing multiple requests simultaneously for maximum efficiency. Built-in middleware handles cookies, headers, and request throttling automatically. Extensive documentation and active community support accelerate development.

Scrapy's pipeline architecture enables sophisticated data processing workflows. Clean, validate, and store extracted data through customizable components. Integration with databases, cloud storage, and data processing frameworks happens naturally through Python's ecosystem.
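The clean, validate, and store flow is easier to picture with a small example. The sketch below uses plain Python rather than Scrapy itself, so it runs without installing anything. In Scrapy, each stage would be a pipeline class with a `process_item` method. The stage functions, the `MemoryStore` class, and the required fields are assumptions made for illustration.

```python
def clean(item):
    """Strip surrounding whitespace from every string field."""
    return {k: v.strip() if isinstance(v, str) else v for k, v in item.items()}


def validate(item):
    """Return None to drop items that are missing a required field."""
    required = ("name", "url")
    if any(not item.get(field) for field in required):
        return None
    return item


class MemoryStore:
    """Stand-in for a database or cloud storage writer."""

    def __init__(self):
        self.rows = []

    def __call__(self, item):
        self.rows.append(item)
        return item


def run_pipeline(items, stages):
    """Pass each item through the stages in order, stopping if one drops it."""
    kept = []
    for item in items:
        for stage in stages:
            item = stage(item)
            if item is None:
                break
        else:
            # Only reached when no stage dropped the item.
            kept.append(item)
    return kept


store = MemoryStore()
scraped = [
    {"name": "  Example Co ", "url": "https://example.com"},
    {"name": "", "url": "https://example.org"},  # fails validation
]
result = run_pipeline(scraped, [clean, validate, store])
```

Here only the first item reaches the store, with its name trimmed to `"Example Co"`. Keeping each stage as a small, single-purpose component is what makes it easy to swap the in-memory store for a real database later.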

Best for: Development teams building custom extraction solutions with specific requirements and full control needs.

3. Beautiful Soup

Beautiful Soup offers the gentlest introduction to programmatic web scraping. This Python library transforms HTML parsing from complex programming into intuitive operations that beginners can master quickly.

The library's strength lies in its forgiving parser that handles malformed HTML gracefully. Navigate document structures through simple, readable code. Extract specific elements using CSS selectors or tree traversal methods that make sense intuitively.

While lacking advanced features like JavaScript rendering or concurrent processing, Beautiful Soup excels for straightforward extraction tasks. Parse static HTML, extract specific data points, and integrate with other Python tools seamlessly.
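As a rough picture of what "parse static HTML and extract specific data points" involves, here is a sketch that uses only Python's built-in `html.parser` module, which is one of the parsers Beautiful Soup can run on top of. The `LinkExtractor` class and the sample markup are made up for illustration. With Beautiful Soup installed, the same job would typically be a few lines using `BeautifulSoup(html, "html.parser")` and `select("a")`.

```python
from html.parser import HTMLParser


class LinkExtractor(HTMLParser):
    """Collect (href, text) pairs for every <a> tag that has an href."""

    def __init__(self):
        super().__init__()
        self.links = []
        self._href = None
        self._text = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            self._href = dict(attrs).get("href")
            self._text = []

    def handle_data(self, data):
        # Only collect text while we are inside a link.
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            self.links.append((self._href, "".join(self._text).strip()))
            self._href = None


# Deliberately sloppy markup: the <p> tags are never closed.
html = """
<html><body>
  <p>Pricing: <a href="/pricing">See plans</a>
  <p>Docs: <a href="/docs"> Read the docs </a>
</body></html>
"""

parser = LinkExtractor()
parser.feed(html)
links = parser.links
```

After running this, `links` holds the two href and text pairs even though the markup is not well formed. Beautiful Soup's appeal is that it hides this event-handling boilerplate behind a searchable tree.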

Best for: Python beginners, data scientists needing quick extraction scripts, and projects with simple HTML parsing requirements.

4. Puppeteer

Puppeteer brings Google Chrome's power to automated web scraping through the Chrome DevTools Protocol. This Node.js library controls headless Chrome instances, enabling extraction from JavaScript-heavy sites that defeat simpler tools.

The library handles modern web complexities automatically. Wait for dynamic content loading, interact with page elements, capture screenshots, and generate PDFs. Puppeteer navigates single-page applications and AJAX-loaded content naturally.

Direct Chrome control enables sophisticated automation scenarios. Fill forms, click buttons, scroll pages, and trigger JavaScript events programmatically. Extract data after complex interactions that mirror real user behavior.

Best for: JavaScript developers working with dynamic websites, single-page applications, and scenarios requiring browser automation.

5. Selenium

Selenium evolved from a testing tool into a versatile web automation platform. Support for multiple browsers and programming languages makes it uniquely flexible among web scraping tools.

The WebDriver protocol enables consistent automation across Chrome, Firefox, Safari, and Edge. Write extraction logic once and run it across different browsers to ensure compatibility and avoid detection. Multiple language bindings support Java, Python, C#, Ruby, and JavaScript developers.

Selenium Grid distributes extraction across multiple machines for parallel processing. Scale extraction projects by adding nodes rather than rewriting code. Built-in wait conditions handle dynamic content loading intelligently.
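A wait condition is essentially a polling loop: check for the content, pause, and give up after a timeout. The sketch below shows that idea in plain Python, with a simulated page standing in for a browser. The `wait_until` function and the fake `element_is_present` check are illustrative assumptions. In Selenium's Python bindings, the equivalent role is played by `WebDriverWait(...).until(...)`.

```python
import time


def wait_until(condition, timeout=10.0, poll_interval=0.5,
               clock=time.monotonic, sleep=time.sleep):
    """Poll `condition` until it returns a truthy value or `timeout` elapses."""
    deadline = clock() + timeout
    while True:
        value = condition()
        if value:
            return value
        if clock() >= deadline:
            raise TimeoutError(f"condition not met within {timeout} seconds")
        sleep(poll_interval)


# Simulated page: the element "appears" on the third check.
attempts = {"count": 0}


def element_is_present():
    attempts["count"] += 1
    return "<div id='results'>" if attempts["count"] >= 3 else None


found = wait_until(element_is_present, timeout=2.0, poll_interval=0.01)
```

Polling with a deadline is generally preferable to a fixed `sleep`, because the script continues as soon as the content is ready and still fails loudly when it never shows up.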

Best for: Teams requiring cross-browser compatibility, teams with existing Selenium expertise, and projects needing distributed extraction.

6. Octoparse

Octoparse democratizes web scraping through intuitive visual workflows. Business users create sophisticated extraction logic without writing code, while the platform handles technical complexities invisibly.

The point-and-click interface identifies data patterns automatically. Select elements visually and Octoparse generates extraction rules. Handle pagination, infinite scrolling, and dropdown menus through visual configuration. Pre-built templates for popular sites accelerate common extraction tasks.

Cloud infrastructure processes extractions without local resource constraints. Schedule recurring extractions, receive notifications on completion, and access data through web interfaces or API endpoints. Team collaboration features enable shared workflow management.

Best for: Non-technical users, teams prioritizing visual interfaces, and organizations needing scheduled extraction workflows.

7. ParseHub

ParseHub applies machine learning to solve web scraping's maintenance challenge. Websites change constantly, breaking traditional extractors. ParseHub's algorithms adapt automatically, maintaining extraction reliability despite layout modifications.

The visual interface combines ease of use with intelligent capabilities. Train the system by selecting data examples, and machine learning generalizes patterns across similar elements. Handle complex scenarios like nested data, conditional logic, and multi-page relationships visually.

API access bridges visual configuration with programmatic integration. Trigger extractions, retrieve results, and manage projects through comprehensive endpoints. Desktop and cloud deployment options accommodate different security requirements.

Best for: Projects where websites change frequently, teams wanting AI-powered reliability, and users who prefer a visual interface.

8. Apify

Apify revolutionized web scraping through its marketplace model. Instead of building from scratch, access hundreds of pre-built scrapers for popular websites. Community-maintained extractors handle platform-specific challenges professionally.

The Actor marketplace includes scrapers for social media platforms, e-commerce sites, search engines, and business directories. Each Actor receives regular updates to handle platform changes. When custom requirements arise, build Actors using Puppeteer or Playwright within Apify's infrastructure.

Platform features handle scaling, scheduling, and data storage automatically. Proxy rotation, browser fingerprinting, and request queuing happen transparently. Webhook notifications and API access integrate results with business applications.

Best for: Teams needing quick solutions for popular platforms, projects requiring proven extractors, and scenarios benefiting from community maintenance.

9. ScrapingBee

ScrapingBee simplifies web scraping to API calls. Send URLs, receive extracted data. This approach eliminates infrastructure management while providing enterprise-grade extraction capabilities.

The API handles JavaScript rendering through headless browsers automatically. Rotate through residential proxies to avoid blocking. Process CAPTCHAs and anti-bot challenges transparently. Custom JavaScript execution enables interaction with dynamic elements.
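The "send a URL, receive data" pattern usually comes down to a single HTTP GET with the target page passed as a query parameter. The sketch below only builds such a request and does not send it. The endpoint `api.scraper.example` and the parameter names `api_key`, `url`, `render_js`, and `country` are hypothetical placeholders, not ScrapingBee's documented API, so check the provider's reference for the real names.

```python
from urllib.parse import urlencode

# Hypothetical endpoint, for illustration only.
API_ENDPOINT = "https://api.scraper.example/v1/"


def build_scrape_request(target_url, api_key, render_js=True, country=None):
    """Build the GET URL for a scraping API call (parameter names are assumed)."""
    params = {
        "api_key": api_key,
        "url": target_url,
        "render_js": "true" if render_js else "false",
    }
    if country:
        params["country"] = country
    # urlencode percent-encodes the target URL so its own "?" and "="
    # are not confused with the API's query string.
    return f"{API_ENDPOINT}?{urlencode(params)}"


request_url = build_scrape_request(
    "https://example.com/products?page=2",
    api_key="YOUR_API_KEY",
)
```

The detail worth noticing is the encoding step: a target URL that carries its own query string must be escaped, otherwise the API would read `page=2` as one of its own parameters.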

Comprehensive SDK support accelerates integration. Libraries for Python, Node.js, Ruby, PHP, and other languages provide native interfaces. Detailed documentation and code examples reduce implementation time significantly.

Best for: Developers prioritizing API integration, projects requiring minimal infrastructure, and teams valuing simplicity over customization.

10. Bright Data

Bright Data (formerly Luminati) provides web scraping infrastructure for large-scale operations. The platform's proxy network spans millions of IPs globally, enabling extraction from any geographic location without restrictions.

The four-layer proxy network includes datacenter, residential, mobile, and ISP proxies. Intelligent rotation and session management maintain extraction reliability. Compliance frameworks ensure ethical data collection within legal boundaries.

Web Unlocker technology bypasses sophisticated anti-bot systems automatically. Handle CAPTCHAs, browser fingerprinting, and behavioral analysis without manual configuration. Professional services support complex extraction requirements through dedicated teams.

Best for: Enterprise organizations, high-volume extraction projects, and scenarios requiring global proxy infrastructure.

Choosing Your Web Scraping Tool: Decision Framework

Selecting among web scraping tools requires matching capabilities to specific requirements. Different projects demand different strengths.

For comprehensive data intelligence: Choose Databar.ai when extraction represents just one component of broader data needs. The platform's integration with 90+ data providers transforms basic web data into complete business insights, eliminating the need for multiple tools and manual enrichment processes.

For development flexibility: Open-source options like Scrapy or Beautiful Soup provide complete control. Build custom solutions tailored to unique requirements. Invest development time for long-term flexibility.

For visual workflows: Octoparse and ParseHub enable sophisticated extraction without programming. Business users create and manage extraction workflows independently. Trade some flexibility for accessibility.

For JavaScript-heavy sites: Puppeteer and Selenium handle dynamic content naturally. Render pages completely before extraction. Accept increased resource usage for compatibility.

For quick implementation: Apify's marketplace and ScrapingBee's API approach minimize setup time. Access proven solutions immediately. Focus on using data rather than extracting it.

For enterprise scale: Bright Data provides infrastructure for massive extraction projects. Global proxy networks and professional support handle complex requirements. Expect significant costs for premium capabilities.

Implementation Best Practices

Successful web scraping extends beyond tool selection to implementation approach. Follow these practices for optimal results:

Start with clear data requirements. Define exactly what information you need, from which sources, at what frequency. Vague objectives lead to overengineered solutions and wasted resources.

Monitor and maintain actively. Set up alerts for extraction failures, data quality issues, and performance degradation. Regular maintenance prevents small issues from becoming major failures.
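One lightweight way to start monitoring is to compute a failure rate and a per-field fill rate for every batch, then raise an alert when either crosses a threshold. The sketch below assumes each result is a dict with an `ok` flag and a `data` dict. That record shape, the `extraction_health` function, and the 5% and 90% thresholds are illustrative choices, not recommendations from any particular tool.

```python
def extraction_health(records, required_fields, max_failure_rate=0.05,
                      min_completeness=0.9):
    """Summarize a batch of scrape results and list any alerts to raise."""
    total = len(records)
    if total == 0:
        return {"failure_rate": 0.0, "completeness": {},
                "alerts": ["no records in batch"]}

    successes = [r for r in records if r.get("ok")]
    failure_rate = (total - len(successes)) / total
    alerts = []
    if failure_rate > max_failure_rate:
        alerts.append(
            f"failure rate {failure_rate:.0%} exceeds {max_failure_rate:.0%}"
        )

    # Share of successful pages where each required field is non-empty.
    completeness = {}
    for field in required_fields:
        filled = sum(1 for r in successes if r["data"].get(field))
        ratio = filled / len(successes) if successes else 0.0
        completeness[field] = ratio
        if ratio < min_completeness:
            alerts.append(f"field '{field}' filled in only {ratio:.0%} of pages")

    return {"failure_rate": failure_rate, "completeness": completeness,
            "alerts": alerts}


batch = [
    {"ok": True, "data": {"title": "Widget", "price": "9.99"}},
    {"ok": True, "data": {"title": "Gadget", "price": ""}},
    {"ok": False, "data": {}},
    {"ok": True, "data": {"title": "Gizmo", "price": "4.50"}},
]
report = extraction_health(batch, required_fields=["title", "price"])
```

For this sample batch the report contains two alerts: one for the failed page and one for the missing price. A drop in fill rate for a single field is often the first sign that a site changed its layout, well before requests start failing outright.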

Plan for scale from the start. Design data storage, processing pipelines, and infrastructure to handle 10x current volumes. Growing data needs shouldn't require complete system rebuilds.

Looking for More Insights?

Professional web scraping requires structured data extraction capabilities that go beyond basic crawling tools. For teams researching web scraping solutions, our comparison of Databar.ai with Phantombuster explains why structured business intelligence approaches deliver more comprehensive results than social media automation tools. For teams evaluating browser-based options, our comparison of Databar.ai with Instant Data Scraper shows where enterprise scraping capabilities go beyond what a browser extension can do.

Why Choose Databar.ai?

While traditional web scraping tools focus solely on extraction, modern data teams need more. They need context, enrichment, and actionable intelligence. Databar.ai addresses this gap by being the only platform that combines web scraping with comprehensive data enrichment, delivering complete business profiles rather than raw data points.

This integrated approach eliminates the need for multiple tools, reduces manual work by up to 80%, and provides the business context that drives better decisions. Start your free Databar.ai trial today and discover how combining web scraping with 90+ data providers turns raw data into actionable business insights.

FAQs About Web Scraping Tools

What's the difference between web scraping tools and manual data collection?

Web scraping tools automate data extraction through programmatic methods, processing thousands of pages in minutes. Manual collection involves copying data by hand - a process that's error-prone, time-consuming, and impossible to scale. Modern scraping tools handle dynamic content, maintain consistency, and integrate with data pipelines automatically.

Do I need programming skills to use web scraping tools?

Not necessarily. Visual tools like Octoparse, ParseHub, and Databar.ai enable sophisticated extraction through point-and-click interfaces. However, programming knowledge expands possibilities and enables custom solutions. Choose tools matching your technical comfort level.

What data formats do web scraping tools typically support?

Common export formats include CSV for spreadsheet compatibility, JSON for programmatic processing, Excel files for business users, and direct database connections for automated pipelines. Most professional tools support multiple formats and custom transformations.
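For a sense of how the two most common formats differ in practice, here is a short standard-library sketch that serializes the same records to CSV and to JSON. The sample records and the `to_csv` and `to_json` helpers are made up for illustration.

```python
import csv
import io
import json

records = [
    {"name": "Example Co", "url": "https://example.com", "employees": 120},
    {"name": "Sample, Inc.", "url": "https://example.org", "employees": 45},
]


def to_csv(rows):
    """Serialize a list of dicts to CSV text, quoting fields that need it."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()


def to_json(rows):
    """Serialize a list of dicts to indented JSON text."""
    return json.dumps(rows, indent=2)


csv_text = to_csv(records)
json_text = to_json(records)
```

Two differences show up right away. The CSV writer has to quote `"Sample, Inc."` because the value contains a comma, and CSV carries no type information, so `employees` comes back as text when the file is read again. JSON keeps numbers as numbers, which is one reason it is often preferred for programmatic processing.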

What's the typical cost range for web scraping tools?

Pricing varies dramatically based on capabilities and scale. Free open-source options like Scrapy require only development time. Visual tools start around $75-99/month. API services begin at $49/month for basic tiers. Enterprise platforms with global infrastructure cost thousands monthly. Databar.ai offers professional capabilities from $39/month with transparent usage-based pricing.
