How to Assess and Validate Cold Email Offers

Fix your offer first, then send the emails that convert

by Jan, August 15, 2025

Your cold emails are getting opened.

But nobody's responding.

You've spent weeks crafting the perfect subject lines, researching prospects, and personalizing every message. Open rates look decent at 44%. But when it comes to actual replies and conversions? Crickets.

Here's what most sales teams don't realize: your offer determines your campaign's success. Knowing how to assess and validate cold-email offers is the difference between messages that get ignored and messages that book meetings. Yet most cold emails never get a response because the offers inside don't resonate with prospects.

The brutal truth? Your offer validation process is probably broken.

Most companies treat offer validation like guesswork. They write what sounds good to them, send it to their list, and hope for the best. When response rates stay below 1%, they blame the subject line or the timing.

But here's what separates winning sales teams from everyone else: they test and validate their offers before sending them to thousands of prospects. They understand that validating cold email offers isn't about perfecting your pitch - it's about proving your value proposition actually matters to your target market.

An excellent offer with bad email copy will outperform a perfectly drafted email with a weak offer every time.

The $50,000 Mistake Your Sales Team Is Making Right Now

Let me describe a scenario you've probably lived through.

Your team spends days researching prospects, crafting personalized openers, and writing compelling subject lines. The first batch of emails goes out with your core value proposition - the one that sounds amazing in sales meetings and looks great on your website.

Open rates hit 40-50%. Click rates look decent. But response rates? Under 1%.

What went wrong?

Your offer doesn't solve a problem your prospects actually care about.

Most sales teams confuse features with benefits, solutions with problems, and what they want to sell with what prospects want to buy. They write offers that sound impressive but don't address real pain points.

The pattern is consistent: companies that validate their offers before sending cold emails tend to see response rates well above the industry average of 1-2%. But most teams skip validation entirely because they assume their offer is already compelling.

Here's the uncomfortable reality: if your cold email offers aren't getting responses, the problem isn't your writing, timing, or personalization. It's that your fundamental value proposition doesn't resonate with your target market.

Poor offer validation creates a vicious cycle. Low response rates lead to more aggressive follow-up sequences. More follow-ups lead to spam complaints and damaged sender reputation. Damaged reputation leads to lower deliverability and even worse results.

The solution isn't sending more emails or better personalization. It's validating that your offer actually matters to prospects before you scale your outreach.

Your Offer Probably Sucks (Here's How to Know for Sure)

Most sales guides focus on tactics - better subject lines, perfect timing, and clever personalization. But offer validation addresses the fundamental question: does your value proposition actually solve problems prospects care about?

Real offer validation means testing whether your core message creates genuine interest before you invest in large-scale outreach campaigns.

The best teams don't assume their offers work. They prove it through systematic testing with small prospect groups before scaling successful approaches.

This isn't about A/B testing subject lines or email templates. It's about validating that your fundamental value proposition resonates with your target market before you commit significant resources to outreach.

Effective offer validation involves three core elements:

First, you need message-market fit - confirmation that your offer addresses problems your prospects actually experience and care about solving. Second, you need timing validation - proof that prospects are ready to consider solutions now rather than someday. Third, you need competitive differentiation - evidence that your approach provides unique value compared to alternatives.

The goal is to create offers that prospects can't ignore because they address urgent, expensive problems with compelling solutions.

Why Your Current Validation Process Might Be Completely Broken

Unvalidated offers waste more than just time and email credits. They damage relationships, hurt sender reputation, and create opportunity costs that compound over time.

Revenue Leakage from Poor Offers

When offers don't resonate, even perfect execution can't save your campaigns. You might achieve excellent open rates and decent click rates, but conversion rates stay flat because the fundamental value proposition doesn't create urgency or interest.

Opportunity cost represents the biggest hidden expense. Every email sent with an unvalidated offer is a missed chance to connect with prospects using messaging that actually works. When you're competing for attention in crowded inboxes, weak offers ensure you lose to competitors with stronger value propositions.

List burnout occurs when you exhaust prospect tolerance with irrelevant offers. Once prospects mark your emails as spam or mentally categorize you as irrelevant, recovering that relationship becomes extremely difficult.

Deliverability Damage from Low Engagement

Poor engagement metrics from unvalidated offers hurt your sender reputation across all email campaigns, not just cold outreach.

When prospects don't engage with your emails - no opens, clicks, or responses - email service providers interpret this as evidence that your content isn't valuable. This affects deliverability for all future campaigns, creating a downward spiral that's difficult to reverse.

Spam complaints increase when offers feel irrelevant or pushy. Even small increases in spam complaint rates can trigger deliverability issues that affect your entire email infrastructure.

Team Morale and Resource Allocation

Failed campaigns create organizational costs beyond immediate results. Sales teams lose confidence in outreach strategies. Marketing teams question campaign effectiveness. Leadership questions resource allocation for demand generation activities.

When offers consistently fail to generate responses, teams often blame execution rather than strategy. This leads to endless tweaking of tactics rather than addressing fundamental offer problems.

Resource misallocation occurs when teams spend time optimizing campaigns with poor offers rather than validating and improving their core value propositions.

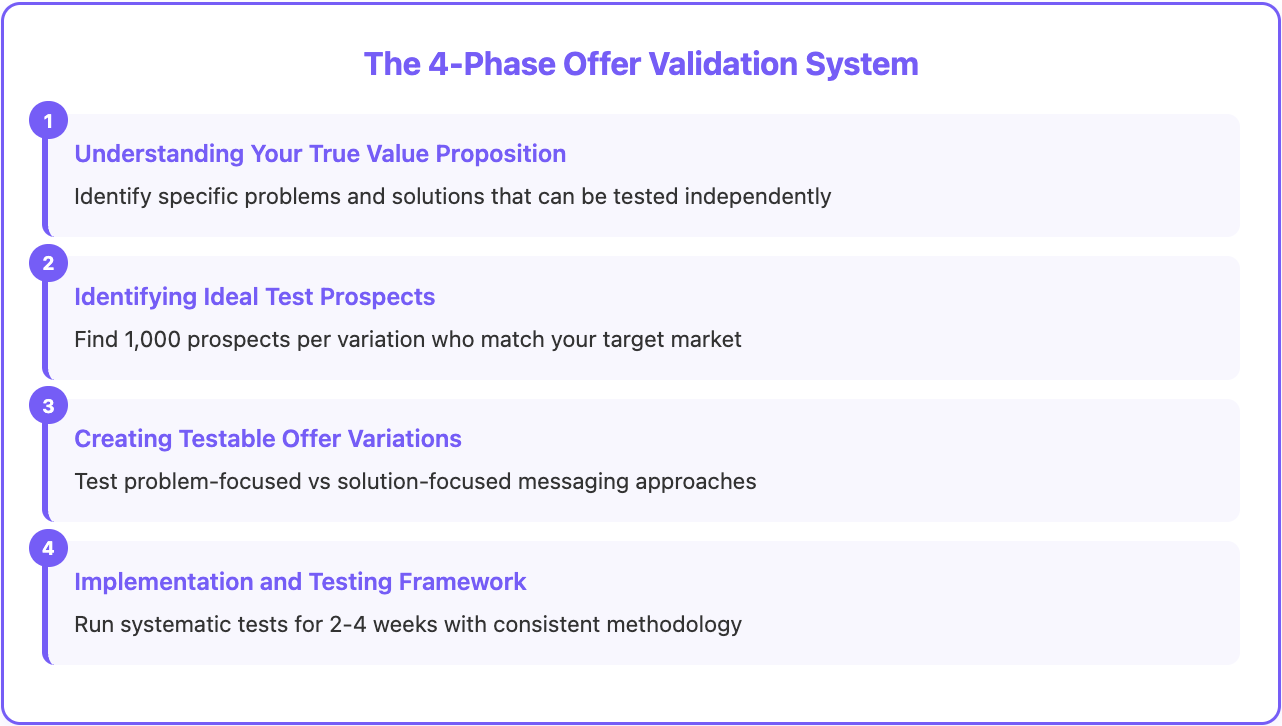

The System To Double Your Response Rates

Successful offer validation requires systematic testing rather than intuition or internal opinions about what prospects want.

Phase 1: Understanding Your True Value Proposition

Most companies think they understand their value proposition, but their assumptions don't match market reality.

Effective validation starts with deconstructing your broader value proposition into specific, testable offers. Instead of promoting everything your product does, focus on individual problems and solutions that can be validated independently.

For example, instead of messaging "our platform increases output," test specific offers like "reduce prospect research time by 75%" or "automate follow-up sequences that book 30% more meetings."

Problem identification requires understanding not just what your product does, but which problems your prospects consider urgent and expensive enough to solve immediately.

The best approach involves analyzing closed-won deals to identify patterns in what actually convinced customers to buy. What specific problems were they trying to solve? What alternatives did they consider? What made your solution compelling compared to doing nothing?

Value quantification helps create offers that prospects can't ignore. Instead of vague benefits like "increased efficiency," provide specific outcomes like "save 10 hours weekly on manual research" or "reduce sales cycle length by 30 days."

Phase 2: Identifying Ideal Test Prospects

Offer validation requires testing with prospects who represent your actual target market, not convenient contacts who might respond regardless of offer quality.

Ideal test prospects share characteristics with your best customers but haven't been exposed to your previous outreach efforts. They should represent the decision-makers, budget holders, and influencers involved in actual purchase decisions.

Sample size matters for meaningful validation. Testing offers with 5-10 prospects provides anecdotal feedback. Testing with 50-100 prospects per offer variation provides statistically meaningful insights.
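To make the sample size point concrete, here is a minimal sketch that computes an approximate 95% Wilson score interval for an observed reply rate. The function name and the example counts are illustrative assumptions, not figures from a real campaign.

```python
import math


def wilson_interval(replies: int, sent: int, z: float = 1.96) -> tuple[float, float]:
    """Return an approximate 95% Wilson score interval for a reply rate."""
    if sent <= 0:
        raise ValueError("sent must be positive")
    p = replies / sent
    denom = 1 + z**2 / sent
    center = (p + z**2 / (2 * sent)) / denom
    half_width = z * math.sqrt(p * (1 - p) / sent + z**2 / (4 * sent**2)) / denom
    return max(0.0, center - half_width), min(1.0, center + half_width)


# Hypothetical example: the same 10% observed reply rate at two sample sizes.
for replies, sent in [(1, 10), (10, 100)]:
    low, high = wilson_interval(replies, sent)
    print(f"{replies}/{sent} replies: plausible reply rate {low:.1%} to {high:.1%}")
```

With these assumed counts, the plausible range is far wider at 10 prospects than at 100, which is why a handful of replies reads as anecdote rather than evidence.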

Segmentation enables more precise validation. Different prospect segments might respond to different value propositions. Testing offers across multiple segments helps identify which messages resonate with which audiences.

For example, IT decision-makers might care about security and integration capabilities, while finance teams focus on ROI and cost savings. Validating offers separately for each stakeholder type provides more actionable insights.

Phase 3: Creating Testable Offer Variations

Effective testing requires creating offer variations that test specific hypotheses about what prospects value most.

Instead of testing random changes, develop variations that test different problem/solution combinations, value propositions, or competitive differentiators.

Hypothesis-driven testing might include:

Comparing problem-focused offers ("struggling with manual data entry?") versus solution-focused offers ("automate your data entry process").

Testing immediate value ("see results in 30 days") versus long-term benefits ("transform your entire sales process").

Evaluating feature-based messaging ("our platform includes advanced analytics") versus outcome-based messaging ("identify your highest-value prospects instantly").

Message structure should remain consistent across variations to isolate the impact of offer changes rather than writing quality or formatting differences.

Each offer variation should be clear, specific, and actionable enough that prospects understand exactly what you're proposing and why it matters to them.
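One way to keep message structure identical across variations is to render every email from a single template where only the offer line changes. The sketch below assumes a hypothetical template, greeting, and call to action; the two offer lines are borrowed from the problem-focused versus solution-focused example in this phase.

```python
from string import Template

# Hypothetical shared structure: only $offer changes between variations.
EMAIL_TEMPLATE = Template(
    "Hi $first_name,\n\n"
    "$offer\n\n"
    "Worth a 15-minute call next week?\n"
)

# Offer lines taken from the examples in this section.
OFFERS = {
    "problem_focused": "Struggling with manual data entry?",
    "solution_focused": "Automate your data entry process.",
}


def render_variations(first_name: str) -> dict[str, str]:
    """Render one email per offer variation from the same fixed template."""
    return {
        name: EMAIL_TEMPLATE.substitute(first_name=first_name, offer=offer)
        for name, offer in OFFERS.items()
    }


emails = render_variations("Alex")
for name, body in emails.items():
    print(f"--- {name} ---\n{body}")
```

Because everything except the offer line is fixed, any difference in response can more plausibly be attributed to the offer rather than to writing quality or formatting.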

Phase 4: Implementation and Testing Framework

Systematic testing requires consistent methodology across all offer variations to ensure reliable results.

Testing protocol should include identical outreach timing, prospect research depth, and follow-up sequences for all variations. The only variable should be the offer itself.

Sample distribution ensures each offer variation reaches similar prospect profiles. Random assignment helps eliminate bias from prospect quality or market timing differences.
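Random assignment can be as simple as shuffling prospects within each segment and dealing them out to variations in turn. This is a rough sketch, not a prescribed method; the `email` and `segment` field names and the example list are assumptions made for illustration.

```python
import random
from collections import defaultdict


def assign_variations(prospects, variations, seed=42):
    """Randomly assign prospects to offer variations, balanced within each segment.

    `prospects` is a list of dicts with hypothetical "email" and "segment" keys.
    """
    rng = random.Random(seed)  # fixed seed so the split is reproducible
    by_segment = defaultdict(list)
    for prospect in prospects:
        by_segment[prospect["segment"]].append(prospect)

    assignment = {}
    for segment in sorted(by_segment):
        group = by_segment[segment][:]
        rng.shuffle(group)
        # Deal shuffled prospects round-robin so each variation gets a similar mix.
        for i, prospect in enumerate(group):
            assignment[prospect["email"]] = variations[i % len(variations)]
    return assignment


# Hypothetical prospect list: 6 IT contacts and 6 finance contacts.
prospects = [
    {"email": f"person{i}@example.com", "segment": "it" if i < 6 else "finance"}
    for i in range(12)
]
assignment = assign_variations(prospects, ["offer_a", "offer_b"])
print(assignment)
```

Shuffling inside each segment, rather than across the whole list, keeps one variation from ending up with mostly finance contacts while another gets mostly IT.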

Response tracking goes beyond simple reply rates to understand response quality and conversion potential. Positive responses that lead to meetings matter more than total response volume.

Timeline considerations affect validation accuracy. Testing during busy periods (end of quarter, holiday seasons) or slow periods might skew results compared to normal business cycles.

Most teams need 2-4 weeks to gather meaningful validation data, depending on prospect volume and response rates.

How To Fix Your Biggest Offer Problem

Traditional offer validation requires extensive manual research, complex testing setups, and time-consuming analysis of results across multiple prospect segments.

Databar simplifies this entire process by providing the data foundation and automation capabilities that make offer validation efficient and actionable.

Complete Prospect Intelligence for Better Validation

Accurate validation requires testing offers with prospects who truly represent your target market, but most teams lack comprehensive data about prospect characteristics and context.

Our automated data enrichment provides the foundation for precise validation testing:

Company intelligence including industry, size, growth stage, technology stack, and recent news helps identify prospects who match your ideal customer profile for meaningful validation results.

Individual stakeholder data covering job titles, responsibilities, recent changes, and professional background enables stakeholder-specific offer testing that reflects real buying scenarios.

Behavioral signals including website activity, content engagement, and buying intent indicators help prioritize prospects most likely to provide meaningful validation feedback.

Technology insights about current tools, integration needs, and technical capabilities inform offer positioning that addresses real competitive and implementation considerations.

This detailed data enables validation testing with prospects who actually represent your target market rather than convenient contacts who might respond regardless of offer quality.

AI-Powered Offer Personalization and Testing

Databar's AI capabilities help create and test offer variations that reflect genuine prospect context rather than generic value propositions.

Dynamic offer generation creates personalized value propositions based on prospect company data, industry challenges, and technology environment. This enables testing multiple offer approaches simultaneously without manually crafting each variation.

Contextual messaging references specific business situations, recent company developments, or industry trends that make offers feel relevant and timely rather than generic sales pitches.

For example, when validating offers for SaaS companies experiencing rapid growth, Databar can automatically generate variations that reference specific growth challenges, technology integration needs, and scaling concerns relevant to each prospect's situation.

The Metrics That Actually Matter (Spoiler: It's Not Open Rates)

Most teams measure offer validation success using simple response rates, but effective validation requires understanding response quality and conversion potential.

Response Quality Analysis

Meaningful validation distinguishes between responses that indicate genuine interest and polite rejections that don't provide actionable insights.

Positive response indicators include meeting requests, questions about implementation, requests for additional information, or forwarding emails to colleagues. These behaviors suggest genuine consideration rather than courtesy responses.

Engagement progression tracks whether initial responses lead to continued conversation, content downloads, or sales process advancement. Offers that generate sustained engagement predict better campaign performance than those producing one-off replies.
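A simple way to separate response quality from response volume is to label each reply and report both rates side by side. The sketch below assumes replies are labeled by hand; the label names and the example counts are made up for illustration.

```python
from collections import Counter

# Hypothetical labels a reviewer might assign to each reply by hand.
POSITIVE = {"meeting_request", "implementation_question", "info_request", "forwarded"}


def summarize_replies(sent: int, reply_labels: list[str]) -> dict[str, float]:
    """Compare the raw reply rate with the rate of replies that signal real interest."""
    counts = Counter(reply_labels)
    positive = sum(n for label, n in counts.items() if label in POSITIVE)
    return {
        "reply_rate": len(reply_labels) / sent,
        "positive_reply_rate": positive / sent,
        "positive_share_of_replies": positive / len(reply_labels) if reply_labels else 0.0,
    }


# Made-up example: 100 emails sent, 6 replies, labeled manually.
labels = ["meeting_request", "polite_no", "info_request", "polite_no", "unsubscribe", "forwarded"]
summary = summarize_replies(100, labels)
print(summary)
```

In this invented example only half of the replies count as positive, so the headline reply rate overstates genuine interest by a factor of two.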

The 4 Validation Mistakes That Cost $$$

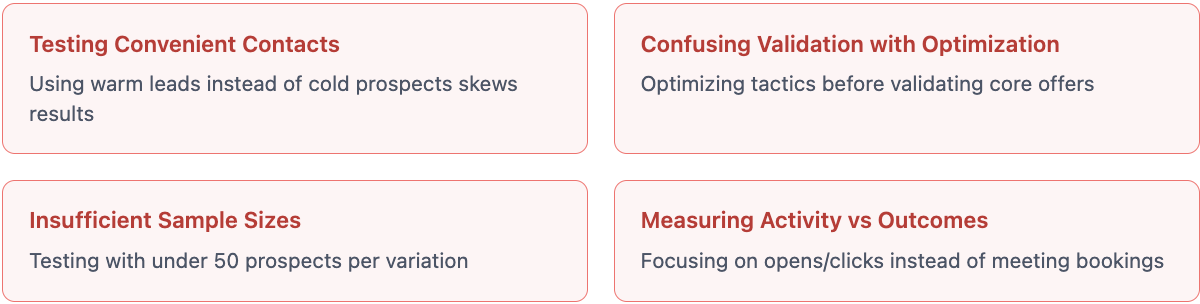

Most offer validation efforts fail because teams make predictable mistakes that undermine the reliability and usefulness of their testing.

Testing with Convenient Rather Than Representative Prospects

Poor validation often results from testing offers with contacts who aren't representative of your actual target market.

Testing with existing connections, warm leads, or prospects who've already shown interest provides skewed results that don't predict broader market response. These contacts might respond positively regardless of offer quality because of existing relationships or previous engagement.

Representative validation requires testing with cold prospects who match your ideal customer profile but haven't been exposed to your previous marketing or sales efforts.

Sample bias occurs when validation groups don't represent the diversity of your actual target market. Testing only with one company size, industry, or geographic region provides limited insights about broader campaign performance.

Confusing Validation with Campaign Optimization

Validation testing addresses fundamental offer effectiveness, while campaign optimization improves execution of validated offers.

Many teams skip validation and jump directly to optimizing subject lines, send timing, or follow-up sequences for offers that don't resonate with prospects. This approach optimizes tactics while ignoring strategy.

Effective validation proves that your core value proposition creates interest before you invest in campaign optimization. Once offers are validated, optimization efforts produce much better returns.

Testing hierarchy should validate offers first, then optimize campaign execution for validated approaches rather than trying to optimize unvalidated offers.

Insufficient Sample Sizes for Reliable Results

Meaningful validation requires testing with enough prospects to distinguish genuine market response from random variation.

Testing offers with 10-20 prospects might provide interesting anecdotal feedback, but results aren't statistically reliable enough to guide campaign decisions. Small sample validation often leads to false positives or negatives that waste resources on poor offers or abandon effective approaches.

Statistical significance typically requires 50-100 prospects per offer variation to provide reliable insights about market response patterns.
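To check whether a gap between two offers is more than random variation, one option is a two-sided Fisher exact test on positive-reply counts. This is a minimal standard-library sketch, not the method the article prescribes; the function name and the example counts are assumptions.

```python
from math import comb


def fisher_exact_two_sided(pos_a: int, sent_a: int, pos_b: int, sent_b: int) -> float:
    """Two-sided Fisher exact test p-value for two offers' positive-reply counts."""
    total_sent = sent_a + sent_b
    total_pos = pos_a + pos_b

    def prob(k: int) -> float:
        # Probability that offer A gets exactly k of the total positive replies
        # if both offers had the same underlying reply rate (hypergeometric).
        return comb(sent_a, k) * comb(sent_b, total_pos - k) / comb(total_sent, total_pos)

    k_min = max(0, total_pos - sent_b)
    k_max = min(sent_a, total_pos)
    observed = prob(pos_a)
    # Sum every outcome at least as unlikely as the one observed.
    p_value = sum(
        prob(k) for k in range(k_min, k_max + 1) if prob(k) <= observed * (1 + 1e-9)
    )
    return min(1.0, p_value)


# Hypothetical results: 6 positive replies from 100 sends vs 2 from 100.
p = fisher_exact_two_sided(6, 100, 2, 100)
print(f"p-value: {p:.3f}")
```

With these made-up counts the p-value comes out well above 0.05, so a 6% versus 2% split at 100 prospects each could still be chance. Treat borderline differences as a reason to keep testing rather than a verdict.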

Segmentation requirements multiply sample size needs when testing across different prospect types. Validating offers for multiple stakeholder roles or industry segments requires larger overall test groups.
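The multiplication is easy to underestimate, so a quick back-of-the-envelope calculation helps when planning list size. This sketch assumes every segment tests every variation; the variation and segment counts are hypothetical.

```python
def total_prospects_needed(per_variation: int, variations: int, segments: int) -> int:
    """Total cold prospects needed when every segment tests every variation."""
    return per_variation * variations * segments


# Using the 50-100 per-variation range from this section, with a hypothetical
# test of 3 offer variations across 2 stakeholder segments.
low = total_prospects_needed(50, variations=3, segments=2)
high = total_prospects_needed(100, variations=3, segments=2)
print(f"Plan for roughly {low}-{high} prospects in total.")
```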

Measuring Activity Rather Than Outcomes

Poor validation metrics focus on email activity rather than business outcomes that matter for campaign success.

Open rates and click rates don't predict whether offers will generate qualified leads or sales opportunities. High engagement metrics with low conversion rates suggest offers create curiosity without driving action.

Outcome-focused validation measures meeting bookings, demo requests, trial sign-ups, or other actions that indicate genuine buying interest rather than casual engagement.
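The gap between activity and outcome metrics is easiest to see when both are computed for the same variations. The sketch below uses invented numbers and hypothetical field names to show how the "winner" can flip depending on which metric you rank by.

```python
from dataclasses import dataclass


@dataclass
class VariationResult:
    name: str
    sent: int
    opens: int
    meetings: int

    @property
    def open_rate(self) -> float:
        return self.opens / self.sent

    @property
    def meeting_rate(self) -> float:
        return self.meetings / self.sent


# Made-up numbers purely for illustration.
results = [
    VariationResult("feature_based", sent=100, opens=52, meetings=1),
    VariationResult("outcome_based", sent=100, opens=41, meetings=4),
]

best_by_opens = max(results, key=lambda r: r.open_rate)
best_by_meetings = max(results, key=lambda r: r.meeting_rate)
print(f"Most opens: {best_by_opens.name}; most meetings: {best_by_meetings.name}")
```

Ranking by open rate would pick the variation that books fewer meetings in this example, which is the failure mode this section warns about.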

Leading indicator identification helps predict which validation metrics correlate with eventual sales success, enabling more accurate assessment of offer effectiveness.

Stop Guessing About What Prospects Want

Offer validation isn't optional for sales teams that want predictable results from cold email campaigns.

The companies that consistently achieve high response rates and conversion rates don't rely on intuition or best practices. They systematically test and validate their offers with real prospects before scaling outreach efforts.

This approach requires more upfront investment than sending emails and hoping for the best. But validation prevents the much larger costs of failed campaigns, damaged relationships, and missed opportunities that result from unvalidated offers.

Effective validation provides insights that improve not just email campaigns but entire go-to-market strategies. Understanding what value propositions resonate with prospects informs product positioning, competitive strategies, and sales messaging across all channels.

The most successful approach focuses on understanding prospect problems and priorities rather than promoting product features or capabilities. When offers address urgent, expensive problems with compelling solutions, engagement and conversions follow naturally.

Start with systematic validation before optimizing tactics. Identify specific hypotheses about what prospects value most. Test offers with representative prospects who match your target market.

Measure outcomes rather than just activities. Track response quality, conversion potential, and behavioral signals that predict genuine interest rather than casual engagement.

Scale validated approaches systematically rather than hoping that unvalidated offers will work better with more volume or better execution.

Most importantly, treat validation as an ongoing capability rather than a one-time project. Market conditions, competitive landscapes, and prospect priorities change over time, requiring continuous validation to maintain campaign effectiveness.

If you're ready to stop guessing about what prospects want and start validating offers that actually drive results, explore how Databar provides the data foundation and automation capabilities for systematic offer validation that improves campaign performance.

Because in a world where most cold emails get ignored, validation isn't just helpful.

It's the way to compete.

Frequently Asked Questions

What's the difference between offer validation and A/B testing email campaigns?

Offer validation tests whether your fundamental value proposition resonates with prospects, while A/B testing optimizes execution of already-validated offers. Validation answers "does our offer matter to prospects?" while A/B testing answers "how can we present our offer more effectively?" You should validate offers before optimizing campaign tactics.

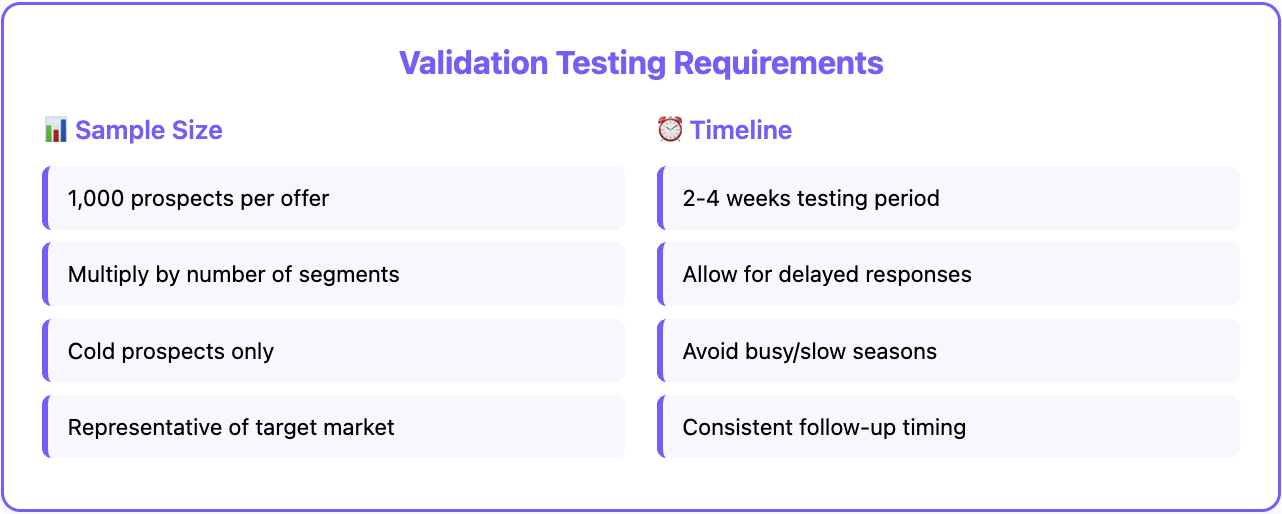

How many prospects do I need for reliable offer validation?

Plan for at least 50-100 prospects per offer variation for statistically meaningful results. Testing with fewer prospects provides anecdotal feedback but isn't reliable enough for campaign decisions. If you're testing across multiple segments (different industries or stakeholder types), multiply this by the number of segments for total validation requirements.

How long should offer validation testing take?

Typically 2-4 weeks depending on prospect volume and response timing. Some prospects need multiple touchpoints before responding, so rushing validation can miss delayed positive responses. However, extending testing beyond 4 weeks risks market condition changes that affect results validity.

Can I validate offers without damaging prospect relationships?

Yes, when validation testing provides genuine value and doesn't feel like spam. Focus on small sample sizes, relevant targeting, and professional follow-up that respects prospect time. Poor validation can damage relationships, but systematic validation with quality offers typically improves prospect perception.

Should I validate offers for different stakeholder types separately?

Absolutely. IT decision-makers, finance teams, and end users care about different outcomes and respond to different value propositions. Validating offers separately for each stakeholder type provides more actionable insights than generic testing across all decision-makers.

How do I know if poor results indicate bad offers or bad execution?

Test offers with consistent execution quality across variations. If all offers perform poorly despite good execution (proper targeting, timing, personalization), the fundamental value propositions likely need refinement. If some offers perform well while others fail with identical execution, focus on scaling successful approaches rather than optimizing tactics.
